Overview

This tutorial will enable any developer or architect to quickly deploy a demo system using the Free Community Edition of the Curity Identity Server. This will be done by running four simple bash scripts:

    ./1-create-cluster.sh
    ./2-create-certs.sh
    ./3-deploy-postgres.sh
    ./4-deploy-idsvr.sh
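The four steps can also be chained in a small wrapper that stops at the first failure. The following is a minimal sketch, assuming the scripts are run from the repository root; the `run_steps` function name is hypothetical and is not part of the repository.

```shell
# Hypothetical helper: run each given command in order and stop at the first failure.
run_steps() {
  local step
  for step in "$@"; do
    echo "Running $step ..."
    if ! "$step"; then
      echo "Step failed: $step" >&2
      return 1
    fi
  done
}

# Example usage from the repository root:
# run_steps ./1-create-cluster.sh ./2-create-certs.sh ./3-deploy-postgres.sh ./4-deploy-idsvr.sh
```

Stopping early avoids deploying the Identity Server into a cluster where an earlier step, such as certificate creation, did not complete.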

No prior Kubernetes experience is needed, and the result will be a system with real-world URLs and a working example application. This type of initial end-to-end setup can be useful when designing deployment solutions.

Later, once you are finished with the demo installation, you can free all resources by running the following script:

./5-delete-cluster.sh

Design the Deployment

Software companies often start their OAuth infrastructure by designing base URLs that reflect their company and brand names. This tutorial uses a subdomain-based approach and the following URLs:

| Base URL | Description |
|---|---|
| https://login.curity.local | The URL for the Identity Server, which users see when login screens are presented |
| https://admin.curity.local | An internal URL for the Curity Identity Server Admin UI |

The Curity Identity Server supports some advanced deployment scenarios but this tutorial uses a fairly standard setup consisting of the following components:

| Server Role | Number of Containers |
|---|---|
| Identity Server Admin Node | 1 |
| Identity Server Runtime Node | 2 |
| Database Server | 1 |

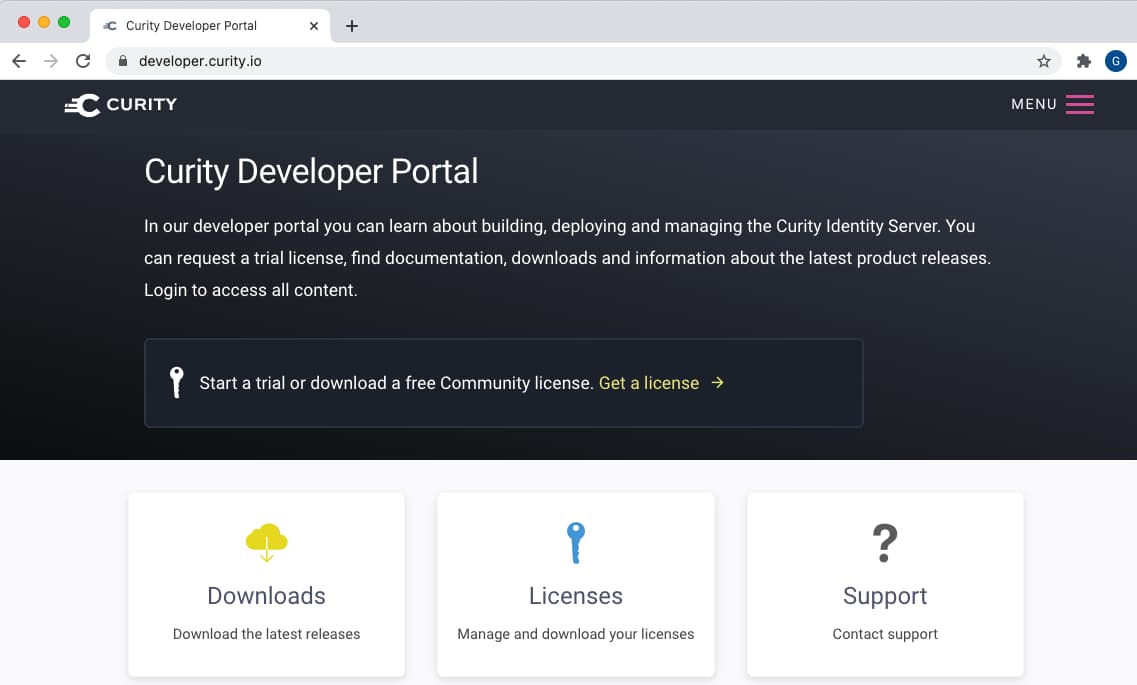

Get a License File

For the deployed system to work you will need a license file. Any developer new to Curity can quickly sign in to the Developer Portal with their GitHub account to get one.

Create the Kubernetes Cluster

Install Prerequisites

The system can be deployed on any workstation via bash scripts, and has the following prerequisites:

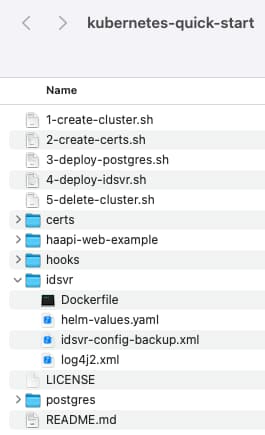
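Before running the scripts, it can help to confirm that the required tools are on the PATH. The following is a minimal sketch; the `missing_tools` function is hypothetical, and the tool list in the usage comment is an assumption based on the commands used in this tutorial rather than the official prerequisite list.

```shell
# Print the name of every given tool that is not found on the PATH.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Example usage, with tool names assumed from commands that appear in this tutorial:
# missing_tools minikube kubectl helm openssl docker curl jq psql
```

An empty result means that every listed tool was found.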

Get the Kubernetes Helper Scripts

Clone the GitHub repository using the link at the top of this page, which contains the following resources:

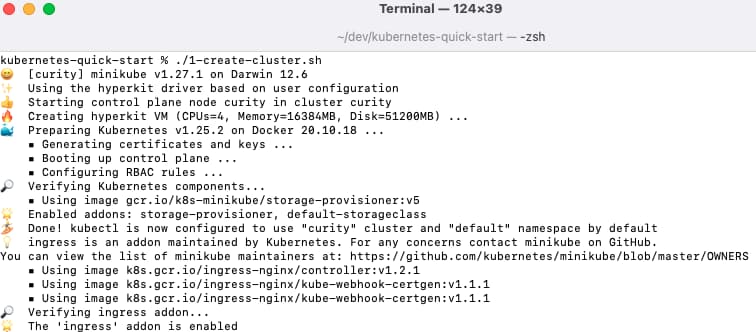

Run the Cluster Install Script

View the 1-create-cluster.sh script to understand what it does, and then run it with ./1-create-cluster.sh. Once it completes, the cluster can be administered using standard kubectl commands.

Minikube Profiles

The Kubernetes cluster runs on minikube, a widely used tool for local Kubernetes development. The scripts also use minikube profiles, which are useful for switching between multiple 'deployed systems' on a development computer.

- minikube start --cpus=2 --memory=8192 --disk-size=50g --profile curity
- minikube stop --profile curity
- minikube delete --profile curity

By default the scripts use moderate resources from the local computer. Some follow-on tutorials add large additional systems to the cluster, such as Prometheus or Elasticsearch. If you run those tutorials, consider increasing the CPU and RAM allocated to the cluster accordingly.
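One way to make the cluster size adjustable is to build the start command from optional environment variables. This is a sketch only: the `MINIKUBE_CPUS`, `MINIKUBE_MEMORY_MB` and `MINIKUBE_DISK` variable names are hypothetical, and the repository scripts are not known to read them.

```shell
# Build the minikube start command from optional environment overrides.
# Defaults match the command shown above; the variable names are hypothetical.
minikube_start_command() {
  local cpus="${MINIKUBE_CPUS:-2}"
  local memory="${MINIKUBE_MEMORY_MB:-8192}"
  local disk="${MINIKUBE_DISK:-50g}"
  echo "minikube start --cpus=$cpus --memory=$memory --disk-size=$disk --profile curity"
}

# Example: print the command for a larger cluster
# MINIKUBE_CPUS=4 MINIKUBE_MEMORY_MB=16384 minikube_start_command
```

Printing the command rather than running it makes it easy to review the settings before creating the cluster.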

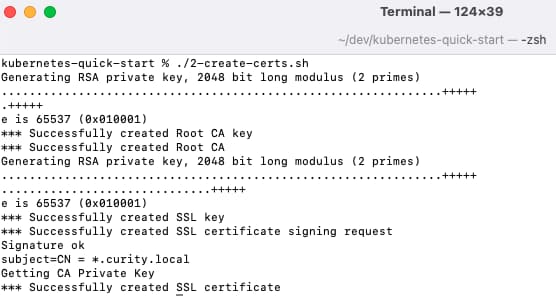

Create SSL Certificates

Next run the 2-create-certs.sh script, which will use OpenSSL to create some test certificates for the external URLs of the demo system:

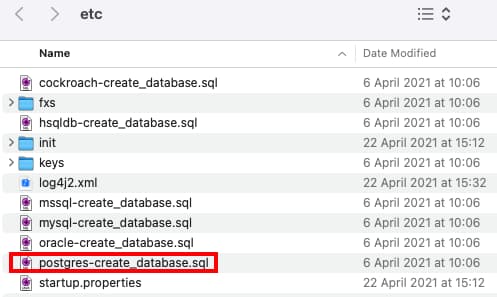
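To illustrate the kind of OpenSSL commands involved, here is a minimal sketch that issues a root CA and a server certificate for the two demo host names. It is not the content of 2-create-certs.sh, which may differ. Only the curity.local.ca.pem file name comes from this tutorial; the function name and the other file names are hypothetical.

```shell
# Hypothetical sketch: create a demo root CA and a server certificate in the given folder.
create_demo_certs() {
  local dir="$1"
  mkdir -p "$dir" || return 1

  # 1. Root CA: private key plus a self-signed certificate
  openssl genrsa -out "$dir/curity.local.ca.key" 2048 || return 1
  openssl req -x509 -new -key "$dir/curity.local.ca.key" \
    -sha256 -days 365 -subj "/CN=Demo Root CA for curity.local" \
    -out "$dir/curity.local.ca.pem" || return 1

  # 2. Server key and certificate signing request
  openssl genrsa -out "$dir/curity.local.ssl.key" 2048 || return 1
  openssl req -new -key "$dir/curity.local.ssl.key" \
    -subj "/CN=*.curity.local" \
    -out "$dir/curity.local.ssl.csr" || return 1

  # 3. Subject alternative names for the two external URLs
  printf 'subjectAltName=DNS:login.curity.local,DNS:admin.curity.local\n' \
    > "$dir/curity.local.ext" || return 1

  # 4. Issue the server certificate from the root CA
  openssl x509 -req -in "$dir/curity.local.ssl.csr" \
    -CA "$dir/curity.local.ca.pem" -CAkey "$dir/curity.local.ca.key" \
    -CAcreateserial -sha256 -days 365 \
    -extfile "$dir/curity.local.ext" \
    -out "$dir/curity.local.ssl.pem"
}

# Example usage:
# create_demo_certs certs
```

Browsers validate host names against the subject alternative names, which is why step 3 lists both login.curity.local and admin.curity.local.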

Deploy an Identity Data Store

The Curity Identity Server can save its data to a number of database systems, and this tutorial uses PostgreSQL. You can download and unzip the latest Curity release from the developer portal and get database creation scripts from the idsvr/etc folder.

The deployment copies in a backed-up PostgreSQL script, which creates the database schema and restores a test user account that can be used to sign in to the code example. Run the following script, which will download and deploy the PostgreSQL Docker image. Later in this tutorial, SQL queries will be run against the database's OAuth data, which includes user accounts, audit data, and information about tokens and sessions.

./3-deploy-postgres.sh

Deploy the Curity Identity Server

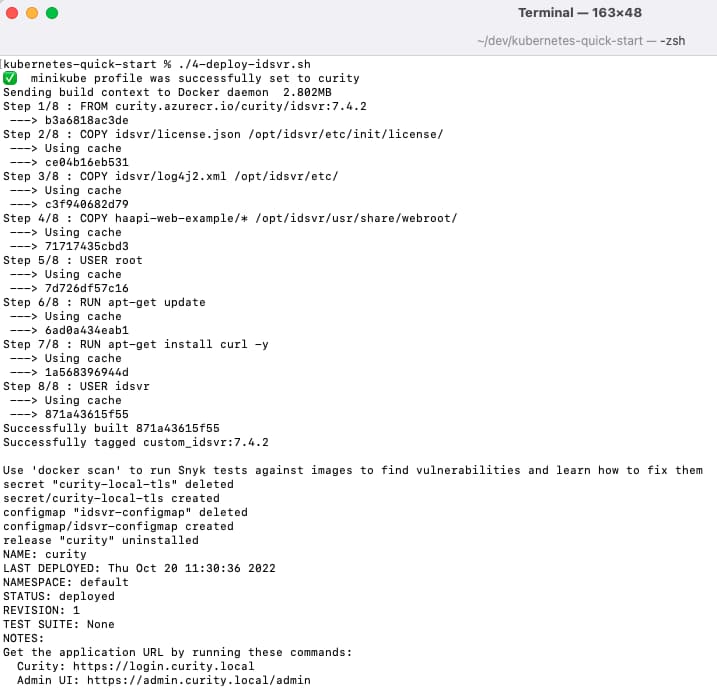

First copy the license file into the idsvr folder. When the deployment is run, a custom Docker image is created, and resources are copied into it, including the license file, some custom logging and auditing configuration, and the Hypermedia API Code Example:

    FROM curity.azurecr.io/curity/idsvr:latest
    COPY idsvr/license.json /opt/idsvr/etc/init/license/
    COPY haapi-web/* /opt/idsvr/usr/share/webroot/
    COPY idsvr/log4j2.xml /opt/idsvr/etc/
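The image build could be guarded by a check that the license file is in place, as in the sketch below. The `build_custom_image` function is hypothetical. It assumes the minikube profile uses the Docker container runtime, so that an image built against minikube's Docker daemon is visible to the cluster, and it assumes the image name custom_idsvr:latest. The deployment script in the repository may build the image differently.

```shell
# Hypothetical helper: build the custom image only when the license file is present.
build_custom_image() {
  local license_file="${1:-idsvr/license.json}"
  if [ ! -f "$license_file" ]; then
    echo "License file not found: $license_file" >&2
    return 1
  fi

  # Point the docker CLI at the Docker daemon inside the minikube profile, so that
  # the cluster can use the image without it being pushed to a registry
  eval "$(minikube docker-env --profile curity)" || return 1

  # Build from the Dockerfile in the current folder (assumed location)
  docker build -t custom_idsvr:latest .
}

# Example usage from the repository root:
# build_custom_image idsvr/license.json
```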

Run the Deployment Script

Next, run the 4-deploy-idsvr.sh script, which deploys a working configuration to the default Kubernetes namespace.

Wait for a couple of minutes for the admin and runtime nodes to be created before using the deployed URLs. To be sure that the system is in a ready state, you can wait for the pods with this command:

    kubectl wait \
      --for=condition=ready pod \
      --selector=app.kubernetes.io/instance=curity \
      --timeout=300s

Running the Helm Chart

The Curity Identity Server consists of a number of Kubernetes components, and the Helm chart simplifies deploying them reliably. The top-level settings are specified in a values file, and a preconfigured helm-values.yaml file is included. This snippet shows how a backed-up configuration is provided as a Kubernetes ConfigMap, and how the external URLs are configured as an ingress:

    replicaCount: 2

    image:
      repository: custom_idsvr
      tag: latest
      pullPolicy: Never
      pullSecret:

    networkpolicy:
      enabled: false

    curity:
      admin:
        logging:
          level: INFO
      runtime:
        logging:
          level: INFO
      config:
        uiEnabled: true
        password: Password1
        configuration:
          - configMapRef:
              name: idsvr-configmap
              items:
                - key: main-config
                  path: idsvr-config-backup.xml

    ingress:
      annotations:
        kubernetes.io/ingress.class: nginx
        nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
      runtime:
        enabled: true
        host: login.curity.local
        secretName: curity-local-tls
      admin:
        enabled: true
        host: admin.curity.local
        secretName: curity-local-tls
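The commands that consume this values file might look like the following sketch. It assumes that the chart repository has already been added to Helm under the name curity, and that the backed-up configuration is stored at idsvr/idsvr-config-backup.xml; that path, the `run` and `deploy_idsvr` function names and the `DRY_RUN` variable are all hypothetical. The actual 4-deploy-idsvr.sh script may perform additional steps, such as creating the TLS secret.

```shell
# Run a command, or only print it when DRY_RUN=1 is set.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical deployment steps that match the values file above.
deploy_idsvr() {
  # The ConfigMap name and key must match the configMapRef entry in the values file
  run kubectl create configmap idsvr-configmap \
    --from-file=main-config=idsvr/idsvr-config-backup.xml || return 1

  # Install the chart under the release name 'curity'
  run helm install curity curity/idsvr --values=idsvr/helm-values.yaml
}

# Example: preview the commands without touching the cluster
# DRY_RUN=1 deploy_idsvr
```

The release name curity matters because the kubectl wait command shown earlier selects pods by the app.kubernetes.io/instance=curity label.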

Understand Kubernetes YAML

By default the Helm Chart hides most of the Kubernetes details. To gain a closer understanding, it can be useful to run the following command, to view the output of the Helm chart:

helm template curity curity/idsvr --values=idsvr/helm-values.yaml

You can then redirect the results to a file, which you can inspect to study the Kubernetes resources created, and how each is configured:

    ---
    # Source: idsvr/templates/service-runtime.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: curity-idsvr-runtime-svc
      labels:
        app.kubernetes.io/name: idsvr
        helm.sh/chart: idsvr-latest
        app.kubernetes.io/instance: curity
        app.kubernetes.io/managed-by: Helm
        role: curity-idsvr-runtime
    spec:
      type: ClusterIP
      ports:
        - port: 8443
          targetPort: http-port
          protocol: TCP
          name: http-port
        - port: 4465
          targetPort: health-check
          protocol: TCP
          name: health-check
        - port: 4466
          targetPort: metrics
          protocol: TCP
          name: metrics
      selector:
        app.kubernetes.io/name: idsvr
        app.kubernetes.io/instance: curity
        role: curity-idsvr-runtime
    ---
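Once the rendered output has been saved, a quick way to get an overview is to count the resources of each kind. This is a minimal sketch; the `count_kinds` function and the idsvr-resources.yaml file name are hypothetical.

```shell
# Count the Kubernetes resources of each kind in a rendered YAML file.
# Only top-level 'kind:' lines are counted, so nested fields are ignored.
count_kinds() {
  grep -E '^kind:' "$1" | sort | uniq -c
}

# Example usage:
# helm template curity curity/idsvr --values=idsvr/helm-values.yaml > idsvr-resources.yaml
# count_kinds idsvr-resources.yaml
```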

Configure Local Domain Names

At this point the demo system's external URLs have been configured in Kubernetes but will not yet work from the host computer. To resolve this, first get the IP address of the minikube virtual machine:

minikube ip --profile curity

Then specify the resulting IP address against both domain names in the hosts file on the local computer, which is usually located at /etc/hosts:

192.168.64.3 admin.curity.local login.curity.local
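Adding the entry can be scripted so that repeated runs do not create duplicates. The following is a sketch under that assumption; the `ensure_hosts_entry` function is hypothetical, and writing to /etc/hosts usually requires root privileges.

```shell
# Append the demo host names to a hosts file unless they are already present.
ensure_hosts_entry() {
  local ip="$1"
  local hosts_file="${2:-/etc/hosts}"
  if grep -qF 'login.curity.local' "$hosts_file"; then
    echo "Entry already present in $hosts_file"
  else
    echo "$ip admin.curity.local login.curity.local" >> "$hosts_file"
  fi
}

# Example usage:
# ensure_hosts_entry "$(minikube ip --profile curity)"
```

The sketch does not update an existing entry, so if the cluster is recreated and the minikube IP address changes, the old line needs to be edited by hand.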

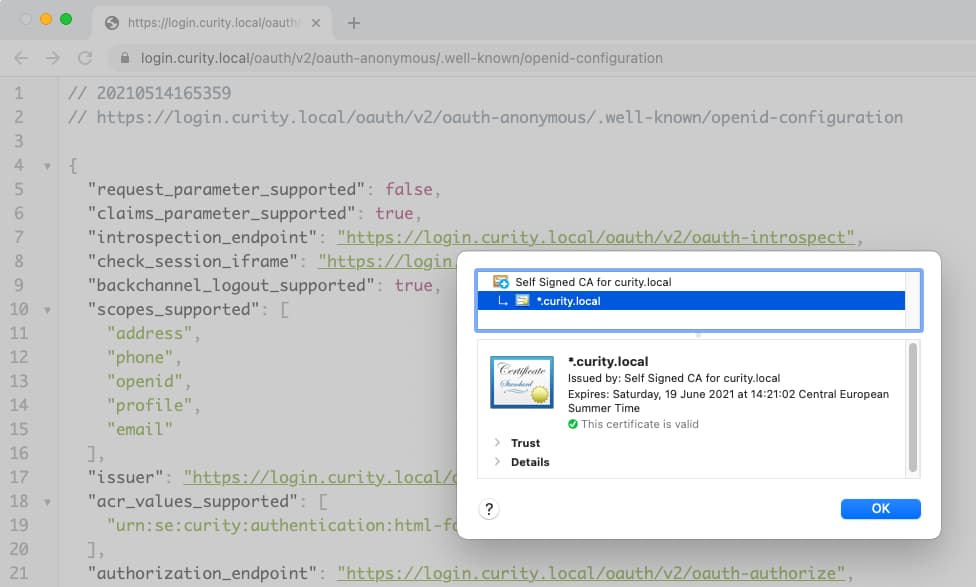

Also, trust the root authority created for the demo SSL certificates, by adding the certs/curity.local.ca.pem file to the local computer's trust store, such as the system keychain on macOS.

Use the Curity Identity Server

OpenID Connect Discovery Endpoint

The discovery endpoint is often the first endpoint that applications connect to, and for the example deployment it is at the following URL:

https://login.curity.local/oauth/v2/oauth-anonymous/.well-known/openid-configuration

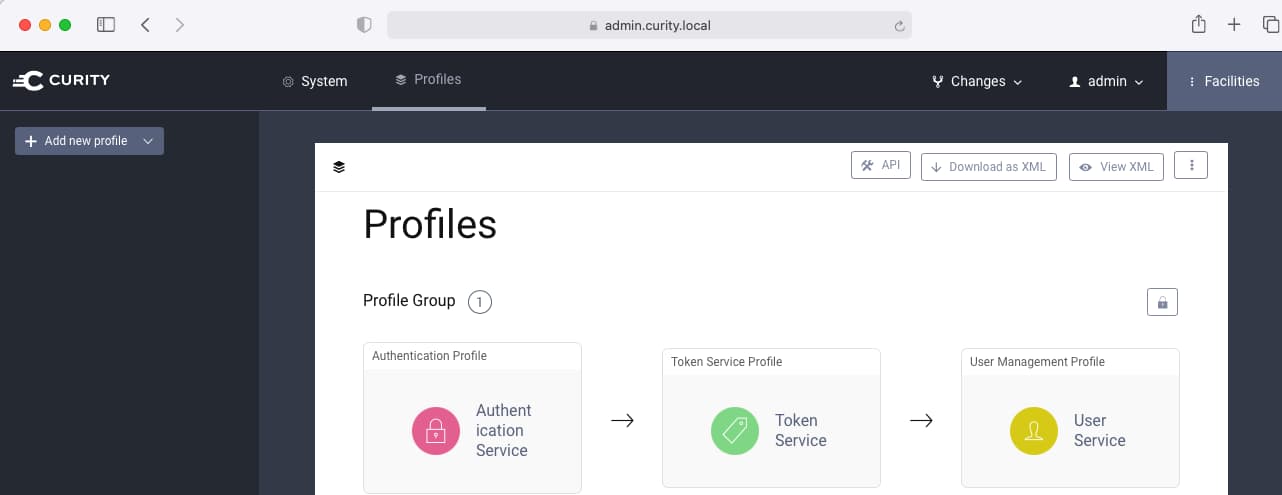
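The discovery document can also be inspected from the command line. The sketch below assumes that curl and jq are installed and prints a few standard OpenID Connect metadata fields; the `discovery_summary` function name is hypothetical.

```shell
# Read an OpenID Connect discovery document on stdin and print key endpoints.
discovery_summary() {
  jq -r '"issuer: \(.issuer)",
         "authorize: \(.authorization_endpoint)",
         "token: \(.token_endpoint)",
         "jwks: \(.jwks_uri)"'
}

# Example usage, trusting the demo root CA rather than disabling verification:
# curl -s --cacert certs/curity.local.ca.pem \
#   https://login.curity.local/oauth/v2/oauth-anonymous/.well-known/openid-configuration \
#   | discovery_summary
```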

Admin UI

The Identity Server's Admin UI is then available, which provides rich options for managing authentication and token issuing behavior:

| Field | Value |
|---|---|
| Admin UI URL | https://admin.curity.local/admin |
| User Name | admin |
| Password | Password1 |

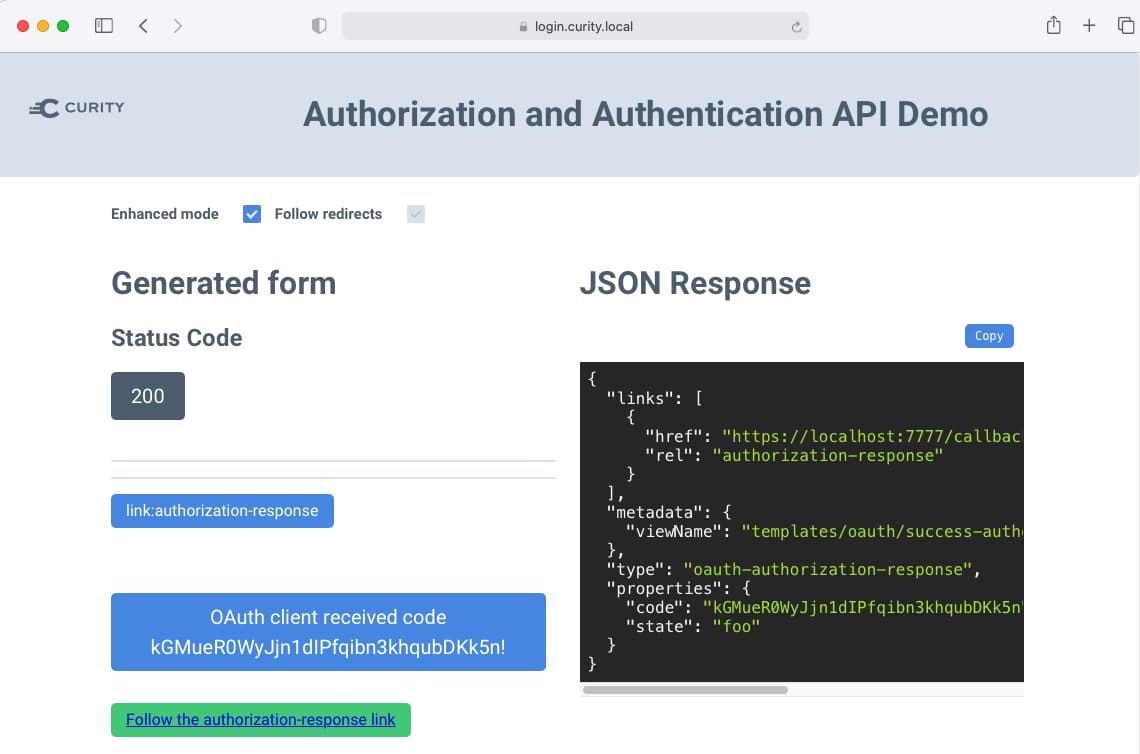

Run the Hypermedia Web Example

Next, run the example application shown at the beginning of this tutorial and sign in with the following test user credentials:

| Field | Value |
|---|---|
| Application URL | https://login.curity.local/demo-client.html |
| User Name | john.doe |
| Password | Password1 |

Runtime URLs

The following base URLs are used by the deployed system. For development purposes, the example Curity Identity Server container includes the curl tool, which enables internal connections to be verified:

| URL | Type | Usage |
|---|---|---|
| https://login.curity.local | External | The URL used by internet clients such as browsers and mobile apps for user logins and token operations |
| https://admin.curity.local | External | This URL would not be exposed to the public internet |
| https://curity-idsvr-runtime-svc:8443 | Internal | This URL would be called by APIs and web back ends running inside the cluster, during OAuth operations |
| https://curity-idsvr-admin-svc:6749 | Internal | This URL can be called inside the cluster to test connections to the RESTCONF API |

Get a shell to a runtime node like this:

    RUNTIME_NODE=$(kubectl get pods -o name | grep -m1 curity-idsvr-runtime)
    kubectl exec -it $RUNTIME_NODE -- bash

Then run a command to connect to an OAuth endpoint:

curl -k 'https://curity-idsvr-runtime-svc:8443/oauth/v2/oauth-anonymous/jwks'

Get a shell to an admin node like this:

    ADMIN_NODE=$(kubectl get pods -o name | grep curity-idsvr-admin)
    kubectl exec -it $ADMIN_NODE -- bash

Then run this command to download configuration from the RESTCONF API:

curl -k -u 'admin:Password1' 'https://curity-idsvr-admin-svc:6749/admin/api/restconf/data?depth=unbounded&content=config'

Manage Identity Data

Curity Identity Server Schema

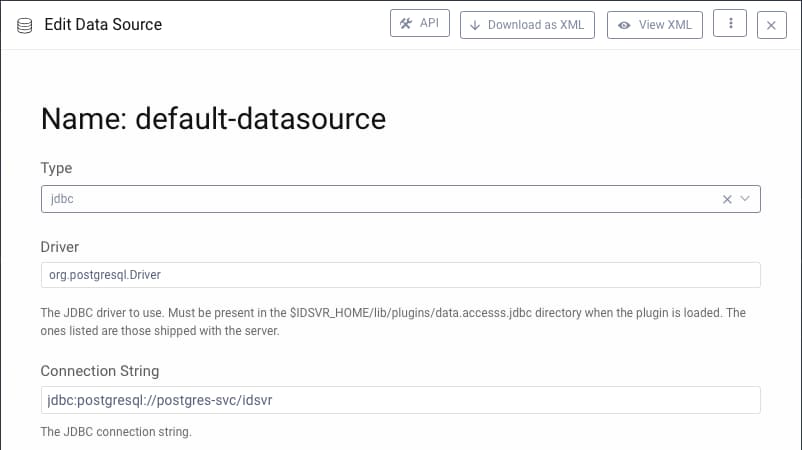

From the Admin UI you can browse to Facilities → Data Sources → default-datasource to view the details for the PostgreSQL connection.

For convenience, the example deployment also exposes the database to the host computer, to enable development queries via the psql tool. Connect to the database with this command:

export PGPASSWORD=Password1 && psql -h $(minikube ip --profile curity) -p 30432 -d idsvr -U postgres

Then get a list of tables with this command:

\dt

The database schema manages three main types of data. These can be stored in different locations if required, though the example deployment uses a single database:

| Data Type | Description |
|---|---|
| User Accounts | Users, hashed credentials and Personally Identifiable Information (PII) |
| Security State | Tokens, sessions and other data used temporarily by client applications |
| Audit Information | Information about authentication events, tokens issued, and to which areas of data |

You can run queries against the deployed end-to-end system to understand data that is written as a result of logins to the example application:

select * from audit;

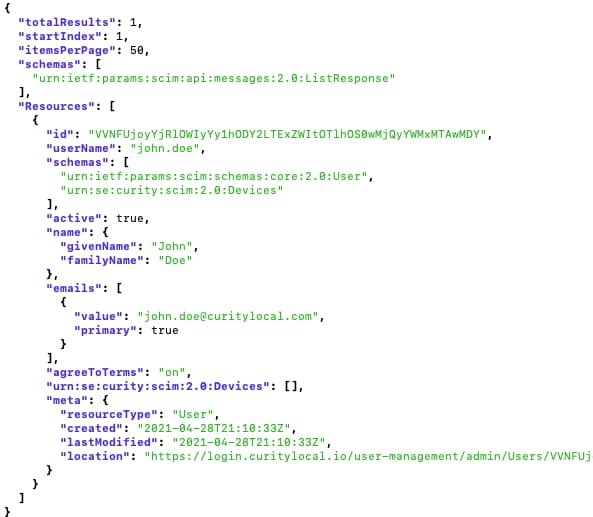

User Management via SCIM

The deployed system has an activated SCIM 2.0 endpoint, which can be called via the following script. The script first uses the client credentials flow to get an access token, then sends a GET request that returns the list of users stored in the SQL database, and uses the jq tool to render the response:

    ACCESS_TOKEN=$(curl -s -X POST https://login.curity.local/oauth/v2/oauth-token \
      -H 'content-type: application/x-www-form-urlencoded' \
      -d 'grant_type=client_credentials' \
      -d 'client_id=scim-client' \
      -d 'client_secret=Password1' \
      -d 'scope=read' \
      | jq -r '.access_token')
    curl -H "Authorization: Bearer $ACCESS_TOKEN" https://login.curity.local/user-management/admin/Users | jq
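To reduce the SCIM response to a list of user names, the output can be piped through a more specific jq filter. This sketch assumes that the response uses the standard SCIM 2.0 list format, with users in a `Resources` array; the `scim_user_names` function name is hypothetical.

```shell
# Read a SCIM list response on stdin and print one user name per line.
# The '?' suppresses the error that would occur if Resources were absent.
scim_user_names() {
  jq -r '.Resources[]?.userName'
}

# Example usage, reusing the access token from the script above:
# curl -s -H "Authorization: Bearer $ACCESS_TOKEN" \
#   https://login.curity.local/user-management/admin/Users | scim_user_names
```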

Log Configuration

The Docker image includes a custom log4j2.xml file, which can be used for troubleshooting. For development purposes the root logger uses level INFO, and this can be increased to level DEBUG when troubleshooting:

    <AsyncRoot level="INFO">
        <AppenderRef ref="rewritten-stdout"/>
        <AppenderRef ref="request-log"/>
        <AppenderRef ref="metrics"/>
    </AsyncRoot>
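Switching the root logger level can be scripted, as in the sketch below. The `set_root_log_level` function is hypothetical. It assumes the AsyncRoot element is written as shown above, and that the image is rebuilt and redeployed afterwards so that the change takes effect.

```shell
# Rewrite the AsyncRoot level in a log4j2.xml file, for example from INFO to DEBUG.
set_root_log_level() {
  local file="$1"
  local level="$2"
  local tmp
  tmp=$(mktemp) || return 1

  # Write to a temporary file first, since in-place sed options differ between platforms
  sed "s/<AsyncRoot level=\"[A-Z]*\"/<AsyncRoot level=\"$level\"/" "$file" > "$tmp" &&
    cat "$tmp" > "$file"
  local status=$?
  rm -f "$tmp"
  return $status
}

# Example usage:
# set_root_log_level idsvr/log4j2.xml DEBUG
```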

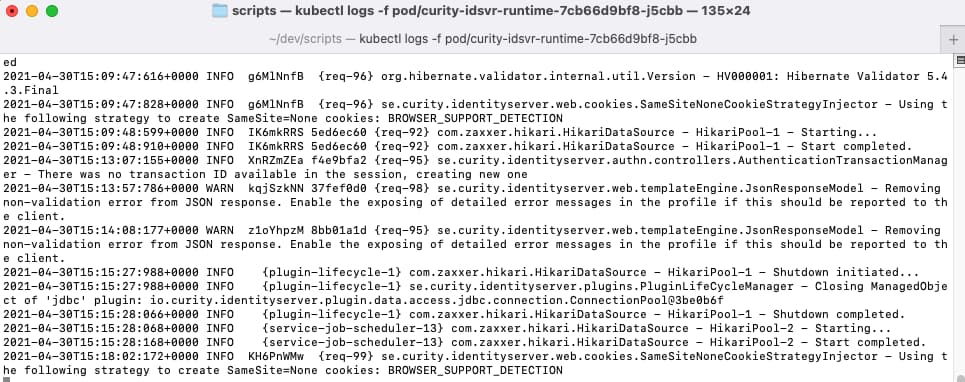

Use standard Kubernetes commands to tail logs when troubleshooting:

    RUNTIME_NODE=$(kubectl get pods -o name | grep -m1 curity-idsvr-runtime)
    kubectl logs -f $RUNTIME_NODE

Backup of Configuration and Data

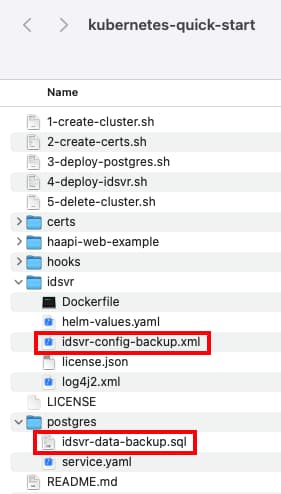

Finally, the initial system could be backed up in a basic way by periodically updating the highlighted files below:

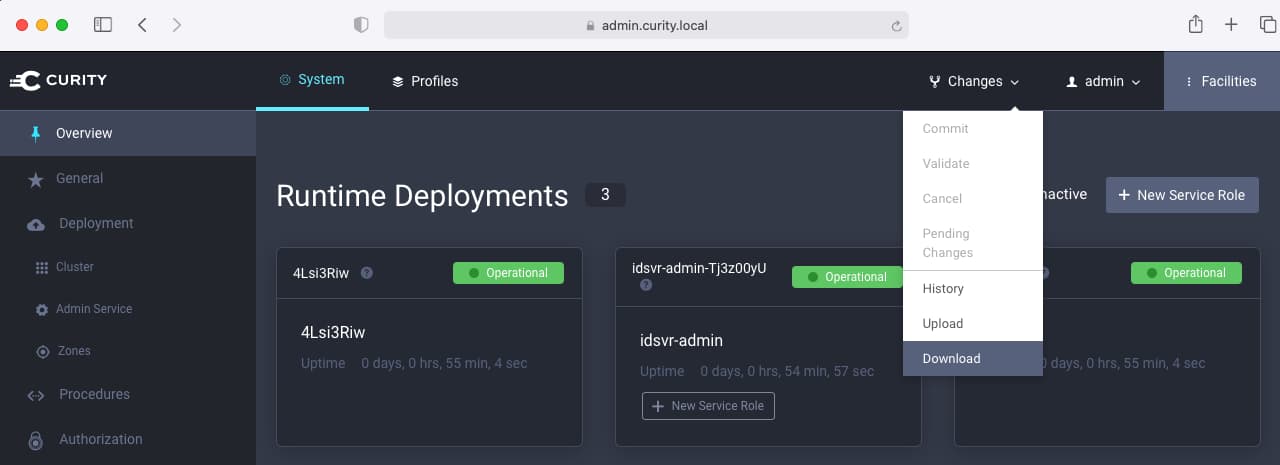

After changes are made in the Admin UI, the configuration can be backed up using the Changes → Download option, or alternatively via the RESTCONF API, using a request such as the curl command shown in the Runtime URLs section.

Similarly the Postgres data can be backed up from the host computer via this command:

    POSTGRES_POD=$(kubectl get pods -o=name | grep postgres)
    kubectl exec -it $POSTGRES_POD -- bash -c "export PGPASSWORD=Password1 && pg_dump -U postgres -d idsvr" > ./postgres/idsvr-data-backup.sql
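Because the backup is written through a shell redirect, a failed command can leave an empty or truncated file behind. The sketch below adds a basic sanity check; the `verify_dump` function is hypothetical, and it relies on the header comment that pg_dump writes at the start of plain-text dumps.

```shell
# Basic sanity check of a plain-text pg_dump file.
verify_dump() {
  local file="$1"
  if [ ! -s "$file" ]; then
    echo "Backup is missing or empty: $file" >&2
    return 1
  fi
  if ! grep -q 'PostgreSQL database dump' "$file"; then
    echo "Backup does not look like pg_dump output: $file" >&2
    return 1
  fi
  echo "Backup looks valid: $file"
}

# Example usage:
# verify_dump ./postgres/idsvr-data-backup.sql
```

This only confirms that the file looks like a dump; restoring it into a scratch database remains the more reliable test.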

Conclusion

This tutorial quickly spun up a working load-balanced setup for the Curity Identity Server, with URLs similar to those used in more complex deployments. This gives developers who are new to the Curity Identity Server a productive start. Real-world solutions also need to deploy the system through a pipeline, and to deal more thoroughly with data management and security hardening. See the IAM Configuration Best Practices article for some recommendations when growing your deployment.
