Overview

A Kubernetes cluster can be extended by installing a service mesh, which provides further options for separating infrastructure concerns from application code. Applications are then deployed as multi-container pods, consisting of both the application and a sidecar. When applications call each other inside the cluster, requests are transparently routed to other components via sidecars.

From a security viewpoint, this also provides an elegant way to ensure confidentiality for OAuth requests issued from inside the cluster. The platform can use SPIFFE-based infrastructure to enable mutual TLS (mTLS) for sidecar-to-sidecar connections. In many use cases, applications can operate without any awareness of certificates, and simply call each other over plain HTTP URLs. The platform keeps identities short-lived and automatically renews them.

Design Service Mesh Deployment

To use a service mesh, you first deploy a base Kubernetes cluster. Next, you deploy the service mesh components to the cluster. Finally, application components within the cluster can opt in to the use of sidecars and mTLS. The following sections explain the main steps for enabling confidentiality of OAuth requests when deploying the Curity Identity Server to an Istio service mesh.

Deploy a Kubernetes Cluster

This tutorial uses Kubernetes in Docker (KIND) as a Kubernetes base cluster for a development computer. A cluster is created using a simple local command:

kind create cluster --name=istio-demo --config=./cluster.yaml

The nodes for hosting pods can be declared in a cluster.yaml file. The following example declares a local cluster that uses two worker nodes to host pods. Other development-related settings can also be expressed in the cluster configuration:

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
- role: worker
- role: worker

Using a local cluster is useful when designing your deployments. Later, you can create the real cluster in your preferred cloud system.

The cluster creation commands vary across cloud platforms and development clusters. Deploying and configuring Istio and the Curity Identity Server then work identically across all of these systems.

Deploy Istio Components

To deploy the Istio service mesh, follow the installation guide to add further components to the cluster. The following commands can be used for a local development cluster:

curl -L https://istio.io/downloadIstio | sh -
./istio-*/bin/istioctl install --set profile=demo -y

Deploy the Curity Identity Server

Use the Helm Chart to deploy the Curity Identity Server to any Kubernetes cluster. An example command is shown here, where a values file provides input parameters:

helm install curity curity/idsvr --values=helm-values.yaml --namespace curity --create-namespace

The following example values file includes options that should be set when using sidecars and mTLS with the Curity Identity Server. First, apply an Istio label to trigger the deployment of sidecars. Next, ensure that both admin and runtime nodes are configured to use plain HTTP inside the cluster:

replicaCount: 2

image:
  repository: curity.azurecr.io/curity/idsvr
  tag: latest

curity:
  adminUiHttp: true
  admin:
    podLabels:
      sidecar.istio.io/inject: 'true'
    logging:
      level: INFO

  runtime:
    podLabels:
      sidecar.istio.io/inject: 'true'
    serviceAccount:
      name: curity-idsvr-service-account
    logging:
      level: INFO

  config:
    uiEnabled: true
    password: Password1
    skipInstall: false

ingress:
  enabled: false

The values file also configures a service account, which is used as the SPIFFE workload identity for runtime nodes of the Curity Identity Server. Finally, it disables the Helm chart's default ingress handling, which only supports standard Kubernetes resources.
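The values file above opts in individual pods through the sidecar.istio.io/inject label. Istio also supports opting in a whole namespace with the istio-injection=enabled label. The following is a minimal sketch of that alternative, not the approach used by this tutorial, and the function name is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: labels a namespace so that Istio injects sidecars
# into all pods subsequently created in it.
# Requires kubectl and access to the cluster when called.
enable_namespace_injection() {
  kubectl label namespace "$1" istio-injection=enabled --overwrite
}

# Example usage:
#   enable_namespace_injection curity
```

Namespace-wide injection affects every pod created in the namespace, including supporting components such as a database, so the pod-level labels shown earlier give finer control.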

Enable Ingress to the Service Mesh

Service meshes use their own custom ingress controllers, which use mTLS when routing to application components. To configure an Istio ingress for the Curity Identity Server, custom resource definitions (CRDs) are used. The following example accepts internet requests over port 443 using TLS, then routes them over port 8443 to runtime nodes of the Curity Identity Server:

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: curity-idsvr-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE
      credentialName: curity-external-tls
    hosts:
    - login.curity.local
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: curity-idsvr-runtime-virtual-service
spec:
  hosts:
  - login.curity.local
  gateways:
  - curity-idsvr-gateway
  http:
  - route:
    - destination:
        host: curity-idsvr-runtime-svc
        port:
          number: 8443

Activate Mutual TLS

Use a PeerAuthentication resource to instruct Istio to use mTLS for incoming OAuth connections to the Curity Identity Server. The following example selects runtime nodes of the Curity Identity Server via the role label assigned to their pods by the Helm chart. Mutual TLS is then required for all OAuth requests:

apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: idsvr-runtime-mtls
spec:
  selector:
    matchLabels:
      role: curity-idsvr-runtime
  mtls:
    mode: STRICT
  portLevelMtls:
    4465:
      mode: DISABLE
    4466:
      mode: DISABLE

In this example, mTLS is disabled for the status and metrics endpoints of the Curity Identity Server, whose default ports are 4465 and 4466 respectively. This may be a requirement in some infrastructure setups, if your monitoring components are unable to connect to these ports via mTLS.

Example End-to-End Deployment

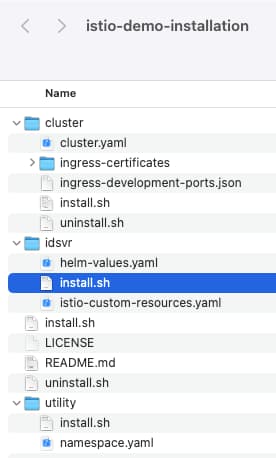

An example deployment is provided with this tutorial, which you can clone using the GitHub link:

Before running the example deployment, ensure that the following prerequisites are installed:

Deploy the System

Next, simply run the install script:

./install.sh

Wait a few minutes for all Docker images to download and deploy, then view pods:

kubectl get pod -n curity -o wide

In the example deployment, both admin and runtime nodes of the Curity Identity Server are deployed as multi-container pods. The second container is the sidecar, with a container name of istio-proxy:

NAME                                   READY   STATUS    RESTARTS   AGE    IP           NODE
curity-idsvr-admin-84f5fd64dd-hjsd7    2/2     Running   0          108s   10.244.2.4   istio-demo-worker2
curity-idsvr-runtime-87cdff864-2dxrs   2/2     Running   0          108s   10.244.2.5   istio-demo-worker2
curity-idsvr-runtime-87cdff864-fjzx9   2/2     Running   0          108s   10.244.1.5   istio-demo-worker
postgres-56798c9976-wrl4w              1/1     Running   0          8m4s   10.244.2.3   istio-demo-worker2
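The READY column gives a quick way to spot pods that run without a sidecar. The following is a minimal sketch that filters the pod listing, assuming the default kubectl column layout in which the second column is READY in ready/total form. The function name is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl get pod` output on stdin and prints
# the names of pods that declare fewer than two containers, which suggests
# that no sidecar was injected.
pods_missing_sidecar() {
  awk 'NR > 1 { split($2, ready, "/"); if (ready[2] + 0 < 2) print $1 }'
}

# Example usage against a live cluster (requires kubectl and cluster access):
#   kubectl get pod -n curity | pods_missing_sidecar
```

For the listing shown above, only the postgres pod would be reported, since it runs a single container.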

Later, when you are finished with the cluster, run the following command to free all resources:

./uninstall.sh

Use the Deployed System

Before accessing deployed Curity Identity Server endpoints from the local computer, add these development domain names to the local computer's hosts file:

127.0.0.1 login.curity.local admin.curity.local
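If you script this step, it helps to make it safe to re-run. The following is a minimal sketch that appends the entry only when it is not already present. The function name is a hypothetical helper, and the hosts file path is passed as a parameter:

```shell
#!/usr/bin/env bash
# Hypothetical helper: appends a hosts entry ($2) to the given file ($1)
# only if that exact line is not already present.
add_hosts_entry() {
  grep -qxF "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Example usage on Linux or macOS, from a shell that can write to /etc/hosts:
#   add_hosts_entry /etc/hosts "127.0.0.1 login.curity.local admin.curity.local"
```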

The deployment uses openssl to create ingress SSL certificates for the development installation. In a cloud deployment this step is not necessary, since you typically use public SSL certificates provided by the cloud platform, or by a provider such as Let's Encrypt.

To ensure that your browser trusts the development certificate, configure it to trust the root certificate authority created at the following location. This can usually be done by importing the root CA into your operating system's trust store.

cluster/ingress-certificates/curity.external.ca.pem
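To illustrate how a development root CA and ingress certificate of this kind can be produced, the following is a minimal openssl sketch. It is not the example deployment's actual script: all file names, subject names and lifetimes are illustrative assumptions, and it relies on the default openssl configuration marking the self-signed certificate as a CA.

```shell
#!/usr/bin/env bash
# All file names below are illustrative assumptions.
workdir="$(mktemp -d)"
cd "$workdir"

# 1. Create a self-signed root CA key and certificate.
openssl req -x509 -newkey rsa:2048 -nodes -days 30 \
  -subj "/CN=Development Root CA" \
  -keyout dev.ca.key -out dev.ca.pem 2>/dev/null

# 2. Create a key and certificate signing request for the ingress.
openssl req -newkey rsa:2048 -nodes \
  -subj "/CN=login.curity.local" \
  -keyout dev.tls.key -out dev.tls.csr 2>/dev/null

# 3. Issue the ingress certificate, adding both development domains as SANs.
printf 'subjectAltName=DNS:login.curity.local,DNS:admin.curity.local\n' > dev.ext
openssl x509 -req -in dev.tls.csr -days 30 \
  -CA dev.ca.pem -CAkey dev.ca.key -CAcreateserial \
  -extfile dev.ext -out dev.tls.pem 2>/dev/null

# 4. Confirm that the ingress certificate chains to the root CA.
openssl verify -CAfile dev.ca.pem dev.tls.pem
```

A certificate and key like these could then be stored as a Kubernetes TLS secret with the name referenced by the Gateway's credentialName, for example with kubectl create secret tls curity-external-tls --cert=dev.tls.pem --key=dev.tls.key -n istio-system. This assumes that the ingress gateway runs in the istio-system namespace.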

Next, log in to the admin UI at https://admin.curity.local/admin, using the admin user and the password supplied in the Helm chart. Then complete the initial setup wizard when prompted, by uploading a license file for the Curity Identity Server, then accepting all default options.

Make Internal OAuth Requests

The deployment includes a utility sleep pod from the Istio code examples. This can be used to simulate a microservice or other backend component that connects to OAuth endpoints inside the cluster. Run the following curl request to make a plain HTTP connection to an OAuth endpoint:

APPLICATION_POD="$(kubectl -n applications get pod -o name)"
kubectl -n applications exec $APPLICATION_POD -- \
  curl -s http://curity-idsvr-runtime-svc.curity:8443/oauth/v2/oauth-anonymous/jwks

This command is routed via the utility pod's Istio sidecar, which then upgrades the connection to use mutual TLS. To see server certificate details when connecting to the Curity Identity Server, run the following command:

kubectl -n applications exec $APPLICATION_POD -c istio-proxy \
  -- openssl s_client -showcerts \
  -connect curity-idsvr-runtime-svc.curity:8443 \
  -CAfile /var/run/secrets/istio/root-cert.pem 2>/dev/null | \
  openssl x509 -in /dev/stdin -text -noout

The response includes a SPIFFE identity for runtime nodes of the Curity Identity Server:

X509v3 Subject Alternative Name:
    URI:spiffe://cluster.local/ns/curity/sa/curity-idsvr-service-account
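The SPIFFE identity encodes the trust domain, the namespace, and the service account configured in the Helm values file. The following is a minimal sketch that splits an identity of this form into its parts. The function name is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: splits a SPIFFE ID of the form
#   spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>
# into its three parts, printed as space-separated values.
# Prints nothing if the input does not match that form.
parse_spiffe_id() {
  echo "$1" | sed -n 's|^spiffe://\([^/]*\)/ns/\([^/]*\)/sa/\([^/]*\)$|\1 \2 \3|p'
}

parse_spiffe_id "spiffe://cluster.local/ns/curity/sa/curity-idsvr-service-account"
# prints: cluster.local curity curity-idsvr-service-account
```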

Conclusion

This tutorial showed how the Curity Identity Server can be deployed to an Istio service mesh. All OAuth requests inside the cluster are then protected by mutual TLS, which ensures confidentiality. If required, you can then also use other Istio mechanisms, such as authorization policies, to further restrict access to OAuth endpoints.
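As an illustration of such a restriction, the following sketch writes an Istio AuthorizationPolicy to a local file for review. The policy name, the file name, and the list of allowed namespaces are assumptions made for this example. The selector reuses the role label from the PeerAuthentication resource shown earlier.

```shell
#!/usr/bin/env bash
# Writes a hypothetical AuthorizationPolicy to a local file for review.
# The policy name and the allowed namespaces are illustrative assumptions.
cat > idsvr-runtime-authz.yaml <<'EOF'
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: idsvr-runtime-callers
  namespace: curity
spec:
  selector:
    matchLabels:
      role: curity-idsvr-runtime
  action: ALLOW
  rules:
  - from:
    - source:
        # Callers are identified by the namespace in their mTLS identity.
        namespaces: ["applications", "istio-system"]
EOF
```

The file could then be applied with kubectl apply -f idsvr-runtime-authz.yaml. Once an ALLOW policy selects a workload, requests that match none of its rules are denied. The istio-system namespace is therefore included so that requests from the ingress gateway are still accepted, and ports where mTLS is disabled would need their own rule, because callers on those ports present no mTLS identity.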
