Integrating Curity Identity Server with Apigee Edge

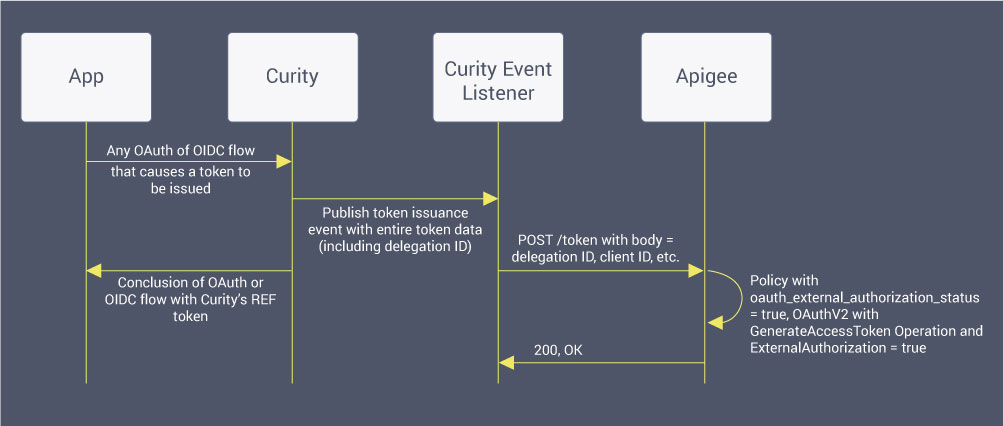

This tutorial explains how to integrate Apigee Edge with the Curity Identity Server. An overview of the API request flow is shown in the following diagram:

In this figure, the OAuth client interacts with Curity to obtain access tokens. It may use any of the standard OAuth or OpenID Connect flows, including device flow and hybrid flows. It may also use non-standard flows supported by Curity, such as the assisted token flow. With any of these, an opaque access token (a GUID or "REF token") is typically issued. This is not strictly required, but is helpful when:

- The OAuth client uses scopes that have a specific Time to Live (TTL);

- No communication between Apigee and Curity is desired when tokens are revoked at Curity; or

- The OAuth client should be unregulated and consequently it should not have access to any Personally Identifiable Information (PII).

Because the token is usually opaque, it needs to be introspected or "dereferenced" to obtain one that can be used when calling the back-end API (i.e., the service that Apigee is fronting). This internal token, or phantom token, is a JSON Web Token (JWT) and includes information about the user, the app, scopes, and other info the API needs to make a fine-grained access control decision.
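
To make the phantom token more concrete, the following is a minimal Node.js sketch of how a back-end API might read the claims carried in such a JWT. The function names and the sample claim values are illustrative assumptions, not taken from Curity's documentation. The sketch deliberately skips signature validation, which a real API must perform before trusting any claim.

```javascript
// Minimal sketch: read the claims from a JWT without verifying it.
// Assumption: runs on Node.js (uses Buffer); signature validation is omitted.
function decodeJwtPayload(jwt) {
  const parts = jwt.split(".");
  if (parts.length !== 3) {
    throw new Error("Expected three dot-separated parts");
  }
  // JWTs use base64url; map it to the standard base64 alphabet before decoding.
  const base64 = parts[1].replace(/-/g, "+").replace(/_/g, "/");
  return JSON.parse(Buffer.from(base64, "base64").toString("utf8"));
}

// Helper used only to build a sample token for this demonstration.
function base64url(obj) {
  return Buffer.from(JSON.stringify(obj))
    .toString("base64")
    .replace(/\+/g, "-")
    .replace(/\//g, "_")
    .replace(/=+$/, "");
}

// Hypothetical claims; a real phantom token is issued and signed by Curity.
const sampleClaims = { sub: "jdoe", client_id: "example-client", scope: "openid" };
const sampleJwt = [base64url({ alg: "none" }), base64url(sampleClaims), "signature"].join(".");

const claims = decodeJwtPayload(sampleJwt);
console.log(claims.sub, claims.client_id, claims.scope.split(" "));
```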

Client Registration

In order for an OAuth client to obtain tokens, it must be registered with the OAuth server, Curity. In addition, the client must be known to Apigee, so that it can report on API usage. To keep these two in sync, an API proxy is placed in front of Curity's Dynamic Client Registration (DCR) endpoint. When a new client is created in the developer portal (or elsewhere), a standards-based API call is made to this proxy, which forwards the call to Curity. If Curity replies with 201 Created, the proxy also creates the client in Apigee. To do this, the proxy invokes the Apigee management API using the dynamically generated client_id from Curity. This interaction model is shown in the following diagram:

In this process, Apigee does the following in the post-flow:

- Extracts the developer ID from the request. Curity ignores this, but Apigee needs to relate the new app to a certain developer or app owner. The portal is expected to be able to determine this and include it in the request.

- Extracts the client_id and client_name from Curity's DCR response (which is just JSON representing the config of the new, dynamic client, as shown below).

- Makes a call to the Apigee client management API to create the new app. This requires basic authentication using a sufficient credential. It also requires three API calls to:

  - Create the app and get the Apigee-generated client_id (referred to as the consumerKey) from the response

  - Add a new credential to the new Apigee app using Curity's client_id and some random secret

  - Delete the randomly generated credential that was created in the first API call

The result of this call is a standard DCR response from Curity, and the portal is oblivious to the sideways calls to the Apigee-specific management APIs.

As an example, the portal may send this request to create a new app:

    POST /client-registration HTTP/1.1
    Host: myco-myorg.apigee.net
    Content-Type: application/json
    Authorization: Bearer 96a31582-5239-49c4-b97f-cc9b406fabd3

    {
      "software_id": "dynamic-client-template",
      "developer": "helloworld@apigee.com"
    }

In the listing above, /client-registration is the DCR proxy. In this case, the new client is being created from a template of one called dynamic-client-template, so the software_id, together with Apigee's developer ID, is all that is sent in. The access token in the Authorization header is the "initial access token" obtained from Curity after authenticating the user or the client (as applicable for the client template); this is a nonce token with the dcr scope, as described more fully in this video tutorial.

The response would be something like the following:

    {
      "grant_types": ["authorization_code", "refresh_token", "implicit"],
      "subject_type": "public",
      "default_max_age": 120,
      "redirect_uris": ["https://localhost:8000/cb"],
      "client_id": "f9e0d55d-bbec-4f61-913a-cc23685b06e9",
      "token_endpoint_auth_method": "client_secret_basic",
      "software_id": "dynamic-client-template",
      "client_secret_expires_at": 0,
      "scope": "openid",
      "client_secret": "LnNIPqzaEIpTXDrDM3hFtQrER32dF0BQD2pZhyfNvRA",
      "client_id_issued_at": 1512475106,
      "client_name": "dynamic-client-template",
      "response_types": ["code", "token", "id_token"],
      "refresh_token_ttl": 3600,
      "id_token_signed_response_alg": "RS256"
    }

The important part of the response (with regard to the Apigee integration) is the client_id. This needs to be registered with Apigee for it to properly report on API usage. For this reason, the sideways calls to the Apigee management API mentioned above must be made.
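
The relationship between the DCR response and the Apigee app can be sketched in a few lines of JavaScript. The helper name apigeeAppName is hypothetical; the naming scheme (the client name, a hyphen, then Curity's client_id) is the one used by the AssignMessage policy in the next section. The response object is abbreviated from the example above.

```javascript
// Sketch: pick out the two DCR response fields that the Apigee integration needs.
const dcrResponse = JSON.parse(`{
  "client_id": "f9e0d55d-bbec-4f61-913a-cc23685b06e9",
  "client_name": "dynamic-client-template",
  "scope": "openid"
}`);

// Hypothetical helper: derive the Apigee app name as "{clientName}-{clientId}".
function apigeeAppName(response) {
  const { client_id: clientId, client_name: clientName } = response;
  if (!clientId || !clientName) {
    throw new Error("DCR response is missing client_id or client_name");
  }
  return `${clientName}-${clientId}`;
}

console.log(apigeeAppName(dcrResponse));
```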

Apigee Policy for the client-registration Proxy

Registering the client app in Apigee starts by extracting the developer ID from the request using an ExtractVariables policy:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <ExtractVariables name="Extract-Developer">
      <DisplayName>Extract Developer</DisplayName>
      <JSONPayload>
        <Variable name="developer">
          <JSONPath>$.developer</JSONPath>
        </Variable>
      </JSONPayload>
      <Source>request</Source>
    </ExtractVariables>

This extracts the developer ID from the request using a JSON path. Next, the API proxy extracts the client_id and client_name from Curity's DCR response using another ExtractVariables policy:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <ExtractVariables name="Extract-Dynamic-Client-ID">
      <DisplayName>Extract Dynamic Client ID</DisplayName>
      <IgnoreUnresolvedVariables>false</IgnoreUnresolvedVariables>
      <JSONPayload>
        <Variable name="clientId">
          <JSONPath>$.client_id</JSONPath>
        </Variable>
        <Variable name="clientName">
          <JSONPath>$.client_name</JSONPath>
        </Variable>
      </JSONPayload>
      <Source>response</Source>
    </ExtractVariables>

Here, it's operating on the response coming from the DCR call to Curity, not the request (as denoted in the Source element). Curity's response will not include the incoming developer ID because it didn't recognize that input and, consequently, didn't save it and won't echo it. The next step is to generate a request to send to the Apigee app management API to create a new client. This starts by using an AssignMessage policy:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <AssignMessage name="Generate-Apigee-Client-Creation-Request">
      <DisplayName>Generate Apigee Client Creation Request</DisplayName>
      <AssignTo createNew="true" type="request">apigeeClientRequest</AssignTo>
      <Set>
        <!-- Client name is the template ID followed by the newly generated ID -->
        <Payload contentType="application/json">{"name":"{clientName}-{clientId}"}</Payload>
        <Path>/v1/organizations/{organization.name}/developers/{developer}/apps</Path>
      </Set>
      <IgnoreUnresolvedVariables>false</IgnoreUnresolvedVariables>
    </AssignMessage>

It is important to note that this policy creates the message (by setting the AssignTo element's createNew attribute value to true). The subsequent steps assume that this message already exists. Because this call is authenticated, the username and password need to be looked up in an encrypted key-value map using a KeyValueMapOperations policy in this way:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <KeyValueMapOperations name="Apigee-Credentials-Key-Value-Map" mapIdentifier="apigeeCredentials">
      <DisplayName>Apigee Credentials Key Value Map</DisplayName>
      <ExpiryTimeInSecs>300</ExpiryTimeInSecs>
      <Get assignTo="private.apigeeClientId" index="1">
        <Key>
          <Parameter>id</Parameter>
        </Key>
      </Get>
      <Get assignTo="private.apigeeClientSecret" index="1">
        <Key>
          <Parameter>secret</Parameter>
        </Key>
      </Get>
    </KeyValueMapOperations>

By using this approach, the Apigee credentials are stored safely in a vault; this idiom is used multiple times in the integration. With these saved in the private.apigeeClientId and private.apigeeClientSecret variables, the apigeeClientRequest object can be updated to include an Authorization header that contains these credentials using a BasicAuthentication policy like this one:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <BasicAuthentication name="Apigee-Basic-Authentication">
      <DisplayName>Apigee Basic Authentication</DisplayName>
      <Operation>Encode</Operation>
      <IgnoreUnresolvedVariables>false</IgnoreUnresolvedVariables>
      <User ref="private.apigeeClientId"/>
      <Password ref="private.apigeeClientSecret"/>
      <AssignTo>apigeeClientRequest.header.authorization</AssignTo>
    </BasicAuthentication>

Having done this, the first request is ready to be made to the Apigee management API using a ServiceCallout policy:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <ServiceCallout name="Create-Apigee-App">
      <DisplayName>Create Apigee App</DisplayName>
      <Request clearPayload="false" variable="apigeeClientRequest"/>
      <Response>apigeeClientResponse</Response>
      <HTTPTargetConnection>
        <URL>https://api.enterprise.apigee.com</URL>
      </HTTPTargetConnection>
    </ServiceCallout>

The request will look like this on the wire:

    POST /v1/organizations/myco-myorg/developers/helloworld@apigee.com/apps HTTP/1.1
    Host: api.enterprise.apigee.com
    Content-Type: application/json
    Authorization: Basic anJhbmRvbTpPcGVuIFNlc2FtZQ==

    {"name" : "dynamic-client-template-5286F144-86C7-42BE-AC24-16F7C04A1837"}

Assuming this was successful, the response will be JSON like this:

    {
      "appId": "9d9bb3c0-7a4f-4dcb-af39-74a609448089",
      "attributes": [],
      "createdAt": 1512473362196,
      "createdBy": "jrandom@curity.io",
      "credentials": [
        {
          "apiProducts": [],
          "attributes": [],
          "consumerKey": "4Fw8tjriEbGxtCQvjD90XsqGVNUhK5rt",
          "consumerSecret": "q8eqcC0Yw273AbO1",
          "expiresAt": -1,
          "issuedAt": 1512473362247,
          "scopes": [],
          "status": "approved"
        }
      ],
      "developerId": "78b28f13-379b-454e-8f2b-bf1065884be5",
      "lastModifiedAt": 1512473362196,
      "lastModifiedBy": "jrandom@curity.io",
      "name": "dynamic-client-template-5286F144-86C7-42BE-AC24-16F7C04A1837",
      "scopes": [],
      "status": "approved"
    }

The Apigee-generated client ID (referred to as consumerKey and contained in the credentials array) and client secret (also in the credentials array and referred to as consumerSecret) need to be extracted from the apigeeClientResponse object for later use:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <ExtractVariables name="Extract-Generated-Client-ID">
      <DisplayName>Extract Generated Client ID</DisplayName>
      <JSONPayload>
        <Variable name="consumerKey">
          <JSONPath>$.credentials[0].consumerKey</JSONPath>
        </Variable>
        <Variable name="consumerSecret">
          <JSONPath>$.credentials[0].consumerSecret</JSONPath>
        </Variable>
      </JSONPayload>
      <Source clearPayload="false">apigeeClientResponse</Source>
    </ExtractVariables>

These JSON path expressions save these values for use in the second and third API calls: the consumerSecret is reused in the second call, and the consumerKey identifies the credential to delete in the third. To prepare for the second call, where the client ID from Curity is added to this new Apigee app, the apigeeClientRequest is reused and updated with a new payload and path as the service callout is made:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <ServiceCallout name="Set-Client-ID">
      <DisplayName>Create Client ID</DisplayName>
      <Request clearPayload="false" variable="apigeeClientRequest">
        <Set>
          <!-- Set the client ID to Curity's but keep using the random secret because no one will ever use it anyway -->
          <Payload contentType="application/json">{"consumerKey":"{clientId}","consumerSecret":"{consumerSecret}"}</Payload>
          <Path>/v1/organizations/{organization.name}/developers/{developer}/apps/{clientName}-{clientId}/keys/create</Path>
        </Set>
      </Request>
      <Response>apigeeClientResponse</Response>
      <HTTPTargetConnection>
        <URL>https://api.enterprise.apigee.com</URL>
      </HTTPTargetConnection>
    </ServiceCallout>

Note how this effectively includes an AssignMessage in the Request tag. This is done for brevity's sake. When this callout is made, the resulting second request looks like this on the wire:

    POST /v1/organizations/myco-myorg/developers/helloworld@apigee.com/apps/dynamic-client-template-5286F144-86C7-42BE-AC24-16F7C04A1837/keys/create HTTP/1.1
    Host: api.enterprise.apigee.com
    Content-Type: application/json
    Authorization: Basic anJhbmRvbTpPcGVuIFNlc2FtZQ==

    {"consumerKey": "f9e0d55d-bbec-4f61-913a-cc23685b06e9","consumerSecret":"q8eqcC0Yw273AbO1"}

The consumerSecret is irrelevant but required, so the generated one from the first API call is used. Lastly, the generated client ID and secret can be removed. This is done by updating the apigeeClientRequest again: the payload is removed, the path is changed, and the HTTP verb is set to DELETE (done again by smuggling the AssignMessage into the ServiceCallout):

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <ServiceCallout name="Delete-Generated-Client-ID">
      <DisplayName>Delete Generated Client ID</DisplayName>
      <Request clearPayload="false" variable="apigeeClientRequest">
        <Remove>
          <Payload>true</Payload>
        </Remove>
        <Set>
          <Path>/v1/organizations/{organization.name}/developers/{developer}/apps/{clientName}-{clientId}/keys/{consumerKey}</Path>
          <Verb>DELETE</Verb>
        </Set>
      </Request>
      <Response>apigeeClientResponse</Response>
      <HTTPTargetConnection>
        <URL>https://api.enterprise.apigee.com</URL>
      </HTTPTargetConnection>
    </ServiceCallout>

On the wire, this looks like this:

    DELETE /v1/organizations/myco-myorg/developers/helloworld@apigee.com/apps/dynamic-client-template-5286F144-86C7-42BE-AC24-16F7C04A1837/keys/4Fw8tjriEbGxtCQvjD90XsqGVNUhK5rt HTTP/1.1
    Host: api.enterprise.apigee.com
    Authorization: Basic anJhbmRvbTpPcGVuIFNlc2FtZQ==

The result of this is a 200 OK response with the deleted consumerKey and consumerSecret in the body as JSON. The body is disregarded because it is not needed.
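
Taken together, the three management API calls can be summarized by the following Node.js sketch, which only builds request descriptions and does not send anything. The function names and the shape of the returned objects are assumptions made for illustration; the paths, verbs, and payloads mirror the policies above. The user name and password are the ones encoded in the example Authorization header shown on the wire.

```javascript
// Sketch: describe the three Apigee management API calls made by the proxy.
// Assumption: Node.js (Buffer) is used for base64; nothing is sent over the network.
function basicAuthHeader(user, password) {
  return "Basic " + Buffer.from(`${user}:${password}`, "utf8").toString("base64");
}

function buildManagementRequests(p) {
  const appName = `${p.clientName}-${p.clientId}`;
  const appsPath = `/v1/organizations/${p.organization}/developers/${p.developer}/apps`;
  const headers = { Authorization: basicAuthHeader(p.user, p.password) };
  return [
    // 1. Create the app; Apigee answers with a generated consumerKey and consumerSecret.
    { method: "POST", path: appsPath, headers, body: { name: appName } },
    // 2. Add Curity's client_id as a credential, reusing the generated secret.
    {
      method: "POST",
      path: `${appsPath}/${appName}/keys/create`,
      headers,
      body: { consumerKey: p.clientId, consumerSecret: p.consumerSecret },
    },
    // 3. Delete the credential that Apigee generated in the first call.
    { method: "DELETE", path: `${appsPath}/${appName}/keys/${p.consumerKey}`, headers },
  ];
}

const requests = buildManagementRequests({
  organization: "myco-myorg",
  developer: "helloworld@apigee.com",
  clientName: "dynamic-client-template",
  clientId: "f9e0d55d-bbec-4f61-913a-cc23685b06e9",
  consumerKey: "4Fw8tjriEbGxtCQvjD90XsqGVNUhK5rt",
  consumerSecret: "q8eqcC0Yw273AbO1",
  user: "jrandom",
  password: "Open Sesame",
});
requests.forEach((r) => console.log(r.method, r.path));
```

Keeping the request construction separate from the transport makes the ordering dependency visible: the third request cannot be built until the first response has supplied the generated consumerKey.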

The sequence of these steps is shown in the following TargetEndpoint configuration. Note that the path in the target, /client-registration, is the configured DCR endpoint of the Curity Identity Server.

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <TargetEndpoint name="default">
      <PostFlow name="PostFlow">
        <Request>
          <Step><Name>Extract-Developer</Name></Step>
        </Request>
        <Response>
          <Step><Name>Extract-Dynamic-Client-ID</Name></Step>
          <Step><Name>Generate-Apigee-Client-Creation-Request</Name></Step>
          <Step><Name>Apigee-Credentials-Key-Value-Map</Name></Step>
          <Step><Name>Apigee-Basic-Authentication</Name></Step>
          <Step><Name>Create-Apigee-App</Name></Step>
          <Step><Name>Extract-Generated-Client-ID</Name></Step>
          <Step><Name>Set-Client-ID</Name></Step>
          <Step><Name>Delete-Generated-Client-ID</Name></Step>
        </Response>
      </PostFlow>
      <HTTPTargetConnection>
        <LoadBalancer>
          <Algorithm>RoundRobin</Algorithm>
          <Server name="dcrServer"/>
        </LoadBalancer>
        <Path>/client-registration</Path>
      </HTTPTargetConnection>
    </TargetEndpoint>

The net result is a standards-based API, usable from a developer portal or any other system, that registers OAuth clients in both the Curity OAuth server and the Apigee API gateway. With such a client, we can now obtain tokens from Curity and present them to an API that is fronted by Apigee.

Enabling DCR in the Curity Identity Server

For the above proxy to be able to register a new client instance in Curity, DCR has to be enabled on the OAuth profile. This configuration is included in the sample configuration available from the customer support portal. If you are not using that configuration as your starting point or would like to set it up yourself, the steps to do so are described in the Dynamic Client Registration video.

Token Issuance

The Curity Identity Server is a very advanced OAuth server. It supports all of the core OAuth and OpenID Connect flows, like the implicit, code, and hybrid flows. It also supports many lesser-known flows, like the device flow and revocation, as well as non-standard ones like token exchange and the assisted token flow. This list of supported message exchange patterns is increasing with each release. Because of the broad range of interaction patterns that the Curity Identity Server supports, it is not possible for Apigee to proxy Curity. Even setting that aside and speaking architecturally: Apigee should not be involved in token issuance. Its role is an API gateway or policy enforcement point; it has no hand in token issuance, so it should not be in the middle of any of these flows. This separation of duties is important for building a highly cohesive API platform where the roles of the entities are clearly defined, and their interactions are based on well-defined contracts, preferably ones based on open standards (like DCR and introspection, described below).

Due to the way that Apigee performs API usage reporting, however, this separation presents a problem: unless Apigee knows about tokens that are issued, it cannot fully report on the usage of an API; it can provide information about which APIs are called, but not which apps or clients are calling them. API reporting cannot be done by simply including an "analytics" policy in a proxy that takes an OAuth client ID. If it could, the integration would be very straightforward; since it cannot, however, Apigee must be informed about all tokens that the Curity Identity Server issues.

To overcome this constraint, an integration is needed that meets two very important requirements:

- Token issuance cannot be delayed by any communication with Apigee. A delay of even 500 ms would affect the user's perception of how long it takes to log into an app. This is not acceptable.

- The tokens that are validated by Apigee should not be the same ones that a client presents to the API proxy, since their only purpose is Curity/Apigee integration. Using different tokens for client API access and for Curity/Apigee integration adheres to the well-known security principle of least privilege.

An integration of Curity and Apigee that overcomes the above-mentioned constraint and satisfies these requirements is shown in the following figure:

This flow begins when an OAuth client app requests a token from the Curity Identity Server using any of the supported flows that cause a token to be issued. As soon as this happens, the caller is given its tokens and the flow, from the app's perspective, is done. At the same time, an event is published whenever a token is issued. To catch this, an event listener is configured in Curity. When it catches the event, the listener calls another API proxy in Apigee. This proxy simply "registers" the token with Apigee. This is done using Apigee's OAuthV2 policy with an Operation of type GenerateAccessToken. For this to work, the OAuthV2 policy has an ExternalAuthorization element with a value of true and the well-known variable oauth_external_authorization_status is also set to true.

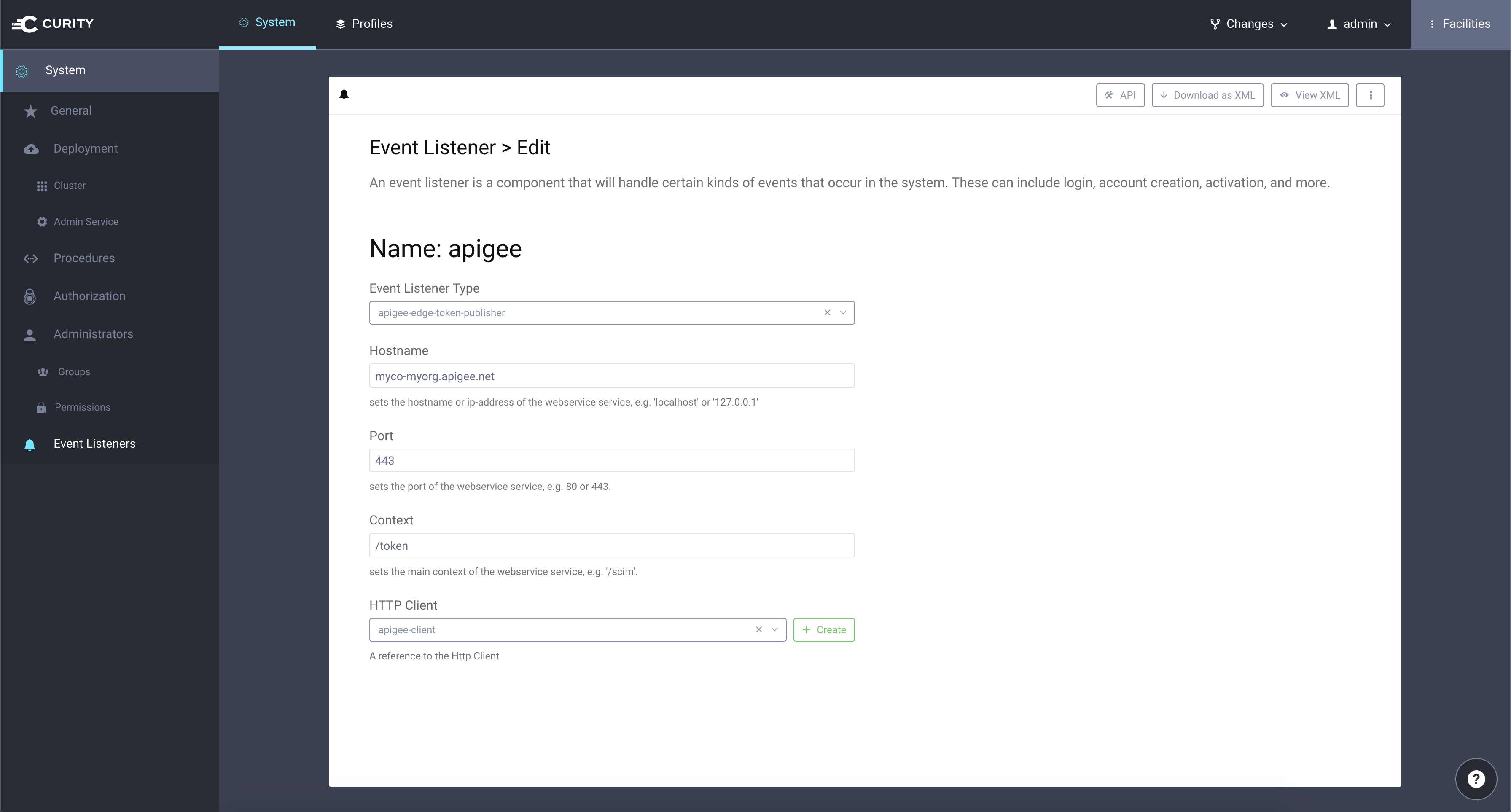

Configuring the Event Listener in Curity

Install and configure the Apigee Token Publisher plugin.

In the context, enter the URI of the Apigee proxy that will store the token (described below). A good suggestion is /token.

The plugin needs an Http Client to communicate with Apigee.

- In the top right corner of the UI, click the Facilities button.

- Next to HTTP Clients click the + button.

- In the Create Http Client dialog box, enter a name (e.g., apigee-client) and click the Create button.

- In the HTTP Authentication drop-down, select http-basic-authn.

- Enter some username that Curity will use to authenticate to the Apigee token proxy that will be created later (e.g., curity).

- Enter a password or click the Generate button [1] to create a random password.

- Enter a scheme; the default of https should be used except for testing and experimenting.

- Click the Close button. The Create HTTP Client dialog will close and you will be back at the page where you were creating the new event listener.

- From the HTTP Client drop-down list, select the new HTTP client.

- From the Changes menu, click Commit. Optionally enter a comment and click OK to make the new configuration active.

That's it. If DCR is already set up, no other configuration is required in Curity to integrate with Apigee!

Apigee Token Proxy

The event listener defined above needs to "register" or store tokens with Apigee, so that it can later validate them when APIs are invoked. Again, this is required so that Apigee can report on which client app called which API. Because Apigee doesn't need the actual token that is used for API security and simply needs to know which app the token is associated with, the delegation ID is used. To receive this and store it, a new API proxy must be defined. Unlike the client registration proxy described above, this one will not have a target (i.e., it won't call a back-end API); it will be entirely self-contained. The request that it will receive will look like this:

    POST /token HTTP/1.1
    Host: myco-myorg.apigee.net
    Content-Type: application/x-www-form-urlencoded
    Authorization: Basic Y3VyaXR5OmN1cml0eQ==
    Content-Length: 139

    client_id=f9e0d55d-bbec-4f61-913a-cc23685b06e9&token=4a76e888-6a26-4744-a36e-93e5e2f424bf&scope=openid&grant_type=client_credentials

The grant_type will always be client_credentials, even when that isn't the grant type (i.e., flow) being used in Curity; this will not affect Apigee's reporting, so you can ignore this input value. The important one is the token. This has to be saved using an OAuthV2 policy. Before we get to that point though, authentication of the Curity client must be performed. This is done by adding a BasicAuthentication policy as the first step in the API proxy's pre-flow, like this:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <BasicAuthentication name="Decode-Basic-Authentication-Credentials">
      <DisplayName>Basic Authentication</DisplayName>
      <Operation>Decode</Operation>
      <User ref="oauthServerId"/>
      <Password ref="oauthServerSecret"/>
      <Source>request.header.Authorization</Source>
    </BasicAuthentication>

To check that the provided credentials are correct, the correct values should be looked up in an encrypted key-value mapping defined in the environment where the API proxy is deployed. This is done using a policy like this:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <KeyValueMapOperations name="Get-Encrypted-OAuth-Server-Credentials" mapIdentifier="oauthServerCredentials">
      <Get assignTo="private.oauthServerId" index="1">
        <Key>
          <Parameter>id</Parameter>
        </Key>
      </Get>
      <Get assignTo="private.oauthServerSecret" index="1">
        <Key>
          <Parameter>secret</Parameter>
        </Key>
      </Get>
      <Scope>environment</Scope>
    </KeyValueMapOperations>

If the credentials are wrong, an access denied fault should be raised using a RaiseFault policy like this:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <RaiseFault name="Access-Denied-Fault">
      <DisplayName>Access Denied Fault</DisplayName>
      <FaultResponse>
        <Set>
          <StatusCode>401</StatusCode>
          <Payload contentType="text/plain">Access Denied</Payload>
          <ReasonPhrase>Access Denied</ReasonPhrase>
        </Set>
      </FaultResponse>
    </RaiseFault>

If the credentials are acceptable, however, the well-known variable oauth_external_authorization_status should be set to true using an AssignMessage policy like this:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <AssignMessage name="Assign-OAuth-Variables">
      <DisplayName>Set Mandatory variables to use external authorization</DisplayName>
      <AssignVariable>
        <Name>oauth_external_authorization_status</Name>
        <Value>true</Value>
      </AssignVariable>
    </AssignMessage>

Once that is done, the final step is to generate the token in Apigee like this:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <OAuthV2 name="OAuth-v20-GenerateAccessToken">
      <DisplayName>OAuth GenerateAccessToken</DisplayName>
      <ExternalAccessToken>request.formparam.token</ExternalAccessToken>
      <ExternalAuthorization>true</ExternalAuthorization>
      <Operation>GenerateAccessToken</Operation>
      <StoreToken>true</StoreToken>
      <ExpiresIn>-1</ExpiresIn>
      <Scope>request.formparam.scope</Scope>
      <SupportedGrantTypes>
        <GrantType>client_credentials</GrantType>
      </SupportedGrantTypes>
    </OAuthV2>

The Operation is set to GenerateAccessToken and the ExternalAuthorization value is true. These are very important and, as described in the Apigee docs, things will not work otherwise. This policy also finds Curity's delegation ID in the variable request.formparam.token, which is then saved as the "Apigee token". It also associates this token with the scopes that were approved by the user in Curity; it does this by referring to a variable, request.formparam.scope, where the Curity event listener put the allowed scopes. The expiration time of this token is infinite (denoted by the -1 value of the ExpiresIn element) since the actual token lifetime is managed by Curity. The supported grant types are restricted to client_credentials since that is all Curity will send.

These steps are shown in the following listing:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <ProxyEndpoint name="default">
      <PreFlow name="PreFlow">
        <Request>
          <Step><Name>Decode-Basic-Authentication-Credentials</Name></Step>
          <Step><Name>Get-Encrypted-OAuth-Server-Credentials</Name></Step>
          <Step>
            <Condition>(oauthServerId != private.oauthServerId) or (oauthServerSecret != private.oauthServerSecret)</Condition>
            <Name>Access-Denied-Fault</Name>
          </Step>
          <Step><Name>Assign-OAuth-Variables</Name></Step>
          <Step><Name>OAuth-v20-GenerateAccessToken</Name></Step>
        </Request>
        <Response/>
      </PreFlow>
      <PostFlow name="PostFlow">
        <Request/>
        <Response/>
      </PostFlow>
      <Flows/>
      <HTTPProxyConnection>
        <BasePath>/token</BasePath>
        <Properties/>
        <VirtualHost>default</VirtualHost>
      </HTTPProxyConnection>
      <RouteRule name="noroute"/>
    </ProxyEndpoint>
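
The decision made by this pre-flow can be paraphrased in plain JavaScript. This is a sketch for Node.js, not code that runs inside Apigee; the function names and the returned object are assumptions, and the expected credentials are the ones encoded in the example request's Authorization header.

```javascript
// Sketch of the token proxy's pre-flow: authenticate the caller, then pick
// the values that the OAuthV2 policy reads from the form body. Assumes Node.js.
function decodeBasic(headerValue) {
  const match = /^Basic\s+(\S+)$/i.exec(headerValue || "");
  if (!match) return null;
  const decoded = Buffer.from(match[1], "base64").toString("utf8");
  const separator = decoded.indexOf(":");
  if (separator < 0) return null;
  return { user: decoded.slice(0, separator), password: decoded.slice(separator + 1) };
}

function handleTokenRegistration(request, expected) {
  const presented = decodeBasic(request.headers.authorization);
  // Mirrors the Condition on the Access-Denied-Fault step.
  if (!presented || presented.user !== expected.id || presented.password !== expected.secret) {
    return { status: 401, body: "Access Denied" };
  }
  const form = new URLSearchParams(request.body);
  return {
    status: 200,
    stored: {
      token: form.get("token"), // saved as the "Apigee token"
      scope: form.get("scope"), // scopes approved in Curity
      clientId: form.get("client_id"), // the client the token was issued to
    },
  };
}

const exampleRequest = {
  headers: { authorization: "Basic Y3VyaXR5OmN1cml0eQ==" },
  body: "client_id=f9e0d55d-bbec-4f61-913a-cc23685b06e9&token=4a76e888-6a26-4744-a36e-93e5e2f424bf&scope=openid&grant_type=client_credentials",
};
const result = handleTokenRegistration(exampleRequest, { id: "curity", secret: "curity" });
console.log(result.status, result.stored);
```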

With this proxy, the Curity event listener, and the client registration proxy, we're almost done. We just have to do one thing: call the API!

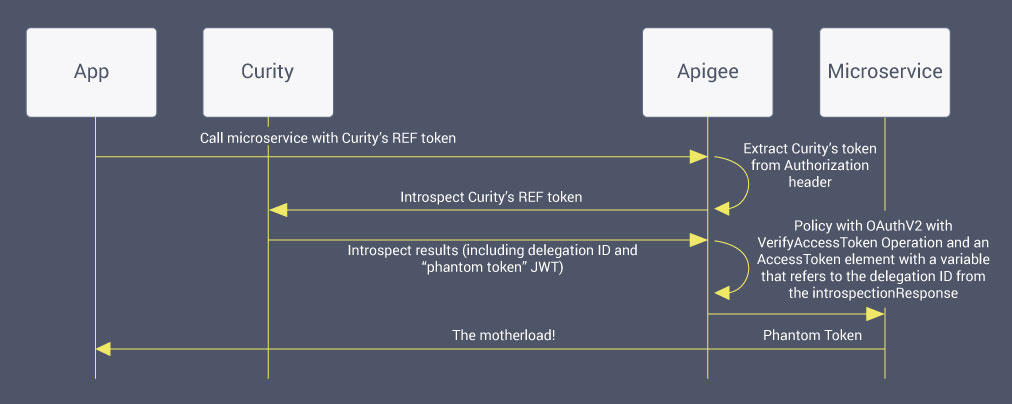

Token Validation

When the API is called, we need to:

- Check that a token is provided and valid by introspecting it;

- Save the information about the calling client for reporting purposes;

- Cache the introspection results; and

- Forward the internal JWT token (or phantom token) to the back-end API.

Note that the introspection procedure used in Curity needs to be updated in order for the phantom token to be presented to Apigee. A detailed description of this is outlined in the Introspection and Phantom Tokens tutorial.

The procedure to do this is shown in the following figure:

It is common to have many APIs, so it is advisable to bundle up these tasks into a reusable policy. This can be done in Apigee by making a shared flow.
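
As a rough illustration of the tasks listed above, the following sketch wraps an introspection call with a cache keyed by the opaque token. Everything here is an assumption made for the example: the introspect function is injected by the caller (and is synchronous only for brevity), the response is presumed to carry active, jwt, and client_id fields, and ttlSeconds is a made-up parameter standing in for whatever cache duration a deployment chooses.

```javascript
// Sketch: validate an opaque token via introspection and cache the result.
// Assumptions: `introspect` is supplied by the caller and returns an object such
// as { active: true, jwt: "...", client_id: "..." }; `ttlSeconds` is hypothetical.
function createTokenValidator(introspect, ttlSeconds, now = () => Date.now()) {
  const cache = new Map(); // opaque token -> { payload, expiresAt }

  return function validate(opaqueToken) {
    if (!opaqueToken) {
      return { status: 401 };
    }
    let entry = cache.get(opaqueToken);
    if (!entry || entry.expiresAt <= now()) {
      // Cache miss (or stale entry): ask the OAuth server.
      const payload = introspect(opaqueToken);
      entry = { payload, expiresAt: now() + ttlSeconds * 1000 };
      cache.set(opaqueToken, entry);
    }
    const { payload } = entry;
    if (!payload.active || !payload.jwt) {
      return { status: 401 };
    }
    // The client ID is kept for reporting; the JWT is what the back end receives.
    return { status: 200, clientId: payload.client_id, jwtForBackend: payload.jwt };
  };
}

// Usage with a stubbed introspection call that counts how often it is invoked.
let calls = 0;
const validate = createTokenValidator(() => {
  calls += 1;
  return { active: true, jwt: "header.payload.signature", client_id: "example-client" };
}, 60);

validate("4a76e888-6a26-4744-a36e-93e5e2f424bf");
const second = validate("4a76e888-6a26-4744-a36e-93e5e2f424bf");
console.log(second.status, "introspection calls:", calls);
```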

Token Introspection Shared Flow

The shared introspection flow has many conditional steps. These are all described below, but, by way of overview, the entire shared flow is shown in the following listing:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <SharedFlow name="default">
      <Step><Name>Extract-Access-Token-from-Authorization-Header</Name></Step>
      <Step>
        <Name>Access-Denied-Fault</Name>
        <Condition>clientRequest.accessToken is null</Condition>
      </Step>
      <Step><Name>Lookup-Introspection-Payload</Name></Step>
      <Step>
        <Name>Generate-Introspection-Request</Name>
        <Condition>lookupcache.Lookup-Introspection-Payload.cachehit = false</Condition>
      </Step>
      <Step>
        <Name>Introspection-Credentials-Key-Value-Map</Name>
        <Condition>lookupcache.Lookup-Introspection-Payload.cachehit = false</Condition>
      </Step>
      <Step>
        <Name>Server-Fault</Name>
        <Condition>lookupcache.Lookup-Introspection-Payload.cachehit = false and (private.introspectionClientId is null || private.introspectionClientSecret is null)</Condition>
      </Step>
      <Step>
        <Name>Environment-Settings-Key-Value-Map</Name>
        <Condition>lookupcache.Lookup-Introspection-Payload.cachehit = false</Condition>
      </Step>
      <Step>
        <Name>Introspection-Basic-Authentication</Name>
        <Condition>lookupcache.Lookup-Introspection-Payload.cachehit = false</Condition>
      </Step>
      <Step>
        <Name>Introspect-Callout</Name>
        <Condition>lookupcache.Lookup-Introspection-Payload.cachehit = false</Condition>
      </Step>
      <Step><Name>Process-JSON-Introspection-Response</Name></Step>
      <Step>
        <Name>Extract-Cache-Duration</Name>
        <Condition>lookupcache.Lookup-Introspection-Payload.cachehit = false</Condition>
      </Step>
      <Step>
        <Name>Cache-Introspection-Payload</Name>
        <Condition>cacheDuration</Condition>
      </Step>
      <Step><Name>Scopes-Key-Value-Map</Name></Step>
      <Step>
        <Name>Expired-Or-Invalid-Access-Token-Fault</Name>
        <Condition>introspectionPayload.jwt is null</Condition>
      </Step>
      <Step><Name>Validate-Apigee-Token</Name></Step>
      <Step>
        <Name>Check-Scopes</Name>
        <Condition>!(scopes is null or scopes is empty)</Condition>
      </Step>
      <Step>
        <Name>Invalid-Scopes-Fault</Name>
        <Condition>requiredScopesProvided = false</Condition>
      </Step>
      <Step><Name>Update-Target-API-Request</Name></Step>
    </SharedFlow>

As the first step's name suggests, the shared flow starts by extracting Curity's REF token from the client's request. This is passed to the API according to RFC 6750. This standard says that the token will be presented in an Authorization request header with an authentication method of Bearer followed by the token. This spec is quite lenient, allowing for any amount of whitespace and casing. This makes it infeasible to use Apigee's ExtractVariables policy, which is otherwise well suited for plucking such things out of messages. For this reason, a JavaScript procedure is used instead:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <Javascript async="false" continueOnError="false" enabled="true" timeLimit="200" name="Extract-Access-Token-from-Authorization-Header">
      <DisplayName>Extract Access Token from Authorization Header</DisplayName>
      <Properties/>
      <ResourceURL>jsc://extract-access-token.js</ResourceURL>
      <IncludeURL>jsc://shared-functions.js</IncludeURL>
    </Javascript>

This includes a library file, shared-functions.js, which defines certain generic functions that are reused in other JavaScript policies later on in the flow. This auxiliary file's contents are this:

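    // Returns true when str is null, undefined, empty, or only whitespace.
    function isBlank(str) {
      return (!str || /^\s*$/.test(str));
    }

    // Returns def when str is blank; otherwise returns str.
    function valueElse(str, def) {
      return isBlank(str) ? def : str;
    }

    // Returns true when value is an integer, either as a number or as a numeric string.
    function isInt(value) {
      return !isNaN(value) &&
        parseInt(Number(value)) == value &&
        !isNaN(parseInt(value, 10));
    }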

The extract-access-token.js file uses these in the following code:

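    // Read the Authorization header, treating a missing header as an empty string.
    var authorizationHeaderValue = valueElse(context.getVariable('request.header.authorization'), "");
    // Split on any run of whitespace; a usable header has a scheme and a token.
    var authorizationHeaderValueParts = authorizationHeaderValue.split(/\s+/);

    if (authorizationHeaderValueParts.length == 2) {
      var bearer = authorizationHeaderValueParts[0];

      // The scheme is matched case-insensitively.
      if (bearer.toLowerCase() === "bearer") {
        var token = authorizationHeaderValueParts[1];
        context.setVariable("clientRequest.accessToken", token);
      }
    }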

If there is a header that matches the requirements of the standard, this script assigns the incoming token to the variable called clientRequest.accessToken. If no such header is found, an error is raised by the next step (which has a condition preventing it from being run when clientRequest.accessToken is not null):

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <RaiseFault name="Access-Denied-Fault">
      <DisplayName>Access Denied Fault</DisplayName>
      <FaultResponse>
        <Set>
          <StatusCode>401</StatusCode>
          <Payload contentType="text/plain">Access Denied</Payload>
          <ReasonPhrase>Access Denied</ReasonPhrase>
        </Set>
      </FaultResponse>
    </RaiseFault>
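
To illustrate which header values the extraction script accepts, the same logic is repackaged below as a standalone function that can be run outside Apigee. The function name extractBearerToken is an assumption for this example; inside the proxy, the result is written to clientRequest.accessToken with context.setVariable instead of being returned.

```javascript
// Standalone version of the extraction logic, for experimenting outside Apigee.
function extractBearerToken(headerValue) {
  const parts = (headerValue || "").split(/\s+/);
  // Expect exactly two parts: the scheme and the token.
  if (parts.length === 2 && parts[0].toLowerCase() === "bearer") {
    return parts[1];
  }
  return null;
}

const samples = [
  "Bearer 4a76e888-6a26-4744-a36e-93e5e2f424bf", // canonical form
  "bearer    4a76e888-6a26-4744-a36e-93e5e2f424bf", // odd casing, extra spaces
  "Basic Y3VyaXR5OmN1cml0eQ==", // wrong scheme
  "", // header missing
];
samples.forEach((value) => console.log(JSON.stringify(value), "->", extractBearerToken(value)));
```

A null result corresponds to the case in which the Access-Denied-Fault step fires.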

Next, we need to see if the REF token has been cached. We do this using a LookupCache policy:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <LookupCache name="Lookup-Introspection-Payload">
      <DisplayName>Lookup Introspected Payload</DisplayName>
      <CacheKey>
        <!-- REF token is used as the cache key -->
        <KeyFragment ref="clientRequest.accessToken"/>
      </CacheKey>
      <Scope>Global</Scope>
      <AssignTo>introspectionResponse.content</AssignTo>
      <CacheLookupTimeoutInSeconds>5</CacheLookupTimeoutInSeconds>
    </LookupCache>

This uses the REF token as the cache key. This cache has a global scope, meaning it is shared across all API proxies. This is done because Curity's REF tokens are unique and thus the data to which such a token refers is also unique. For this reason, there is no need to scope the cache more narrowly. If there is a cache hit, the result will be assigned to the introspectionResponse.content variable and the lookupcache.Lookup-Introspection-Payload.cachehit variable will be set to true. The latter is used in many of the Condition expressions in the shared flow shown above to avoid the call to the introspection endpoint. If the REF token isn't cached yet, however, such a call is needed. This takes a few policies to put together and culminates in a sideways service call. To start with, the introspectionRequest is defined:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><AssignMessage name="Generate-Introspection-Request"><AssignTo createNew="true" type="request">introspectionRequest</AssignTo><DisplayName>Generate Introspection Request</DisplayName><Set><FormParams><FormParam name="token">{clientRequest.accessToken}</FormParam></FormParams><Verb>POST</Verb><!-- default: override by changing in the introspectionPath key in the environmentSettings KVM --><Path>/oauth/v2/oauth-introspect</Path></Set></AssignMessage>

Note that the createNew attribute of the AssignTo element is set to true. This "instantiates" the object that will be updated by the following few policies. The shape of this message is defined by RFC 7662. For this reason, the verb is set to POST and the REF token is added to a form post element named token. The default path is defined here but in case the Curity installation doesn't use this value, a setting is looked up in the environmentSettings key-value map using this policy:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><KeyValueMapOperations name="Environment-Settings-Key-Value-Map" mapIdentifier="environmentSettings"><DisplayName>Environment Settings Key Value Map</DisplayName><Get assignTo="introspectionRequest.path" index="1"><Key><Parameter>introspectionPath</Parameter></Key></Get></KeyValueMapOperations>

If this value isn't defined, the default of /oauth/v2/oauth-introspect is used. To override this, the policy can be changed of course, but the setting can also be done in Environment Configuration. (Refer to Appendix 1 for a complete listing of all environment settings used in this tutorial.)

With the endpoint, HTTP verb, and body defined, the last preparation needed before the service call can be made is authentication. This is done using basic authentication by obtaining an encrypted credential from a key-value map and setting it on the introspectionRequest. This begins with a KeyValueMapOperations policy:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><KeyValueMapOperations name="Introspection-Credentials-Key-Value-Map" mapIdentifier="introspectionCredentials"><DisplayName>Introspection Credentials Key Value Map</DisplayName><ExpiryTimeInSecs>300</ExpiryTimeInSecs><Get assignTo="private.introspectionClientId" index="1"><Key><Parameter>id</Parameter></Key></Get><Get assignTo="private.introspectionClientSecret" index="1"><Key><Parameter>secret</Parameter></Key></Get></KeyValueMapOperations>

If the credential isn't defined, a server error should be the result. This is raised by the following policy:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><RaiseFault name="Server-Fault"><DisplayName>Server Fault</DisplayName><FaultResponse><Set><Payload contentType="text/plain">Server Error</Payload><StatusCode>500</StatusCode><ReasonPhrase>Server Error</ReasonPhrase></Set></FaultResponse></RaiseFault>

Typically the credential is defined, though, so this extra check can be skipped if you prefer to keep the shared flow simple. Once the credential is found in the introspectionCredentials key-value map, the id and secret are stored in the private.introspectionClientId and private.introspectionClientSecret variables, respectively. These are used in the following BasicAuthentication policy:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><BasicAuthentication name="Introspection-Basic-Authentication"><DisplayName>Introspection Basic Authentication</DisplayName><Operation>Encode</Operation><IgnoreUnresolvedVariables>false</IgnoreUnresolvedVariables><User ref="private.introspectionClientId"/><Password ref="private.introspectionClientSecret"/><AssignTo>introspectionRequest.header.Authorization</AssignTo></BasicAuthentication>

Here, the variables are encoded and placed in the introspectionRequest object's Authorization header, as designated in the AssignTo element. Because the policy performs the encoding, it also prefixes the header value with the Basic authentication scheme.
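Taken together, the policies above assemble an RFC 7662 request: a form-encoded POST with a token parameter and a Basic Authorization header. The sketch below shows the resulting message as a plain object so that its shape is easier to see. It is an illustration that runs under Node.js (Buffer is a Node.js API and is not assumed to exist in Apigee's JavaScript runtime); buildIntrospectionRequest and the client/secret credentials are hypothetical.

```javascript
// Sketch only: the message that the AssignMessage, KeyValueMapOperations and
// BasicAuthentication policies build up, expressed as a plain object.
function buildIntrospectionRequest(token, clientId, clientSecret, path) {
    // Basic authentication: base64("id:secret") prefixed with the scheme name.
    var credentials = Buffer.from(clientId + ":" + clientSecret, "utf8").toString("base64");
    return {
        method: "POST",
        // Same default as the policy; the optional argument plays the role of
        // the introspectionPath setting.
        path: path || "/oauth/v2/oauth-introspect",
        headers: {
            "Authorization": "Basic " + credentials,
            "Content-Type": "application/x-www-form-urlencoded"
        },
        // RFC 7662 names the form parameter "token".
        body: "token=" + encodeURIComponent(token)
    };
}

var exampleRequest = buildIntrospectionRequest(
    "813b5dbb-6aeb-41fa-8922-25a0fbeb4460", "client", "secret");
console.log(exampleRequest.headers.Authorization); // Basic Y2xpZW50OnNlY3JldA==
console.log(exampleRequest.body);
```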

With all of these preparations made, the introspection request is ready to be sent:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><ServiceCallout name="Introspect-Callout"><DisplayName>Introspect Callout</DisplayName><Request clearPayload="true" variable="introspectionRequest"/><Response>introspectionResponse</Response><HTTPTargetConnection><LoadBalancer><Algorithm>RoundRobin</Algorithm><Server name="introspectionServer"/></LoadBalancer></HTTPTargetConnection></ServiceCallout>

This policy uses a target server named introspectionServer which is defined in the environment's settings. The request that is sent is the introspectionRequest that was built up in previous steps. The response is saved in another variable called introspectionResponse. The content field of this object, which is the HTTP body of the introspection response or the previously-cached value, is processed with an ExtractVariables policy that uses a few JSON path expressions to set some additional variables; these are prefixed by introspectionPayload as shown in the following listing:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><ExtractVariables name="Process-JSON-Introspection-Response"><DisplayName>Process JSON Introspection Response</DisplayName><JSONPayload><Variable name="jwt" type="string"><JSONPath>$.phantom_token</JSONPath></Variable><Variable name="scope" type="string"><JSONPath>$.scope</JSONPath></Variable><Variable name="apigeeToken" type="string"><JSONPath>$.delegationId</JSONPath></Variable></JSONPayload><Source>introspectionResponse.content</Source><VariablePrefix>introspectionPayload</VariablePrefix><IgnoreUnresolvedVariables>true</IgnoreUnresolvedVariables></ExtractVariables>

To understand how this works, it can be helpful to observe an example of the introspection response. An elided version looks like this:

{
    "jwt": "eyJraWQiOiItMzgwNzQ4MTIiLCJ4NXQiOiJNUi1wR1RhODY2UmRaTGpONlZ3cmZheTkwN2ciLCJhbGciOiJSUzI1NiJ9.eyJqdGkiOiJQJDE0Zjg4NzY5LTFjYzgtNDVlNi04NGI4LTNmZGRjZWZkMzgwNSIsImRlbGVnYXRpb25JZCI6IjViMTk5NGM5LTg2ZTAtNDJmMC1iZDM1LWNhNmQ2MTBjMzUzNiIsImV4cCI6MTUxMjU4OTY4OSwibmJmIjoxNTEyNTg5Mzg5LCJzY29wZSI6InJlYWQgb3BlbmlkIiwiaXNzIjoiaHR0cDovL3NwcnVjZTo4NDQzL2Rldi9vYXV0aC9hbm9ueW1vdXMiLCJzdWIiOiJqb2huZG9lIiwiYXVkIjpbImNsaWVudC10d28iLCJjbGllbnQtb25lIl0sImlhdCI6MTUxMjU4OTM4OSwicHVycG9zZSI6ImFjY2Vzc190b2tlbiJ9.OabYEIP_bpjnYC4S9MAjVeC4doAtEyba8MfGj4yNU_tyHxb9nXIxqQA51HWq_oZ0lYGkAE9dfLNifhsMF5tXd_dW2MVW7mYMCixaUZALns6bE1sCdAAkBESHInSlxoRZOnMOqiUD-ZP1FU7olrqYbIa7lwR2p94Fp4aXicOo3TkMtBJceim_Vn6RTTAV6NSHcjWicP4OB7OK5CpCNIiVSEvH57i01TBuEwP3iAvmbkPo08eEBUfuyQjFqMW7458cCTubIk0uv3jZdP8SedGkK1M3ZBMojSYbF92F4sry8bfqcLHbNAwLZOY3Tnhx_02a_HyI-3LolrsGLghkYlmgIQ",
    "scope": "openid",
    "delegationId": "5b1994c9-86e0-42f0-bd35-ca6d610c3536",
    // ...
}

Looking at this and the ExtractVariables policy above, you can see how the internal JWT is saved in a variable called jwt, and likewise for the scopes and the delegation ID. Note that each JSONPath expression must match the field name that your Curity installation actually returns: the policy above reads the JWT from $.phantom_token, whereas this sample response carries it in a field named jwt, so adjust one to match the other. This delegation ID or "Apigee token" is the one that was sent to the token endpoint from the event listener whenever a token was issued by Curity. It is important to realize that this is the value that was used in the token proxy to register the token with Apigee using the OAuthV2 policy. Now that we have this delegation ID in the introspection results, we need to connect the dots in Apigee, so it can properly report on the API usage. We do this by using another OAuthV2 policy, this one in the introspection shared flow. Unlike the previous one from the token proxy, however, this one has an Operation element with a value of VerifyAccessToken. The AccessToken element is a variable that refers to the delegation ID that was returned from the introspection results:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><OAuthV2 name="Validate-Apigee-Token"><DisplayName>Validate Apigee Token</DisplayName><Operation>VerifyAccessToken</Operation><AccessToken>introspectionPayload.apigeeToken</AccessToken></OAuthV2>

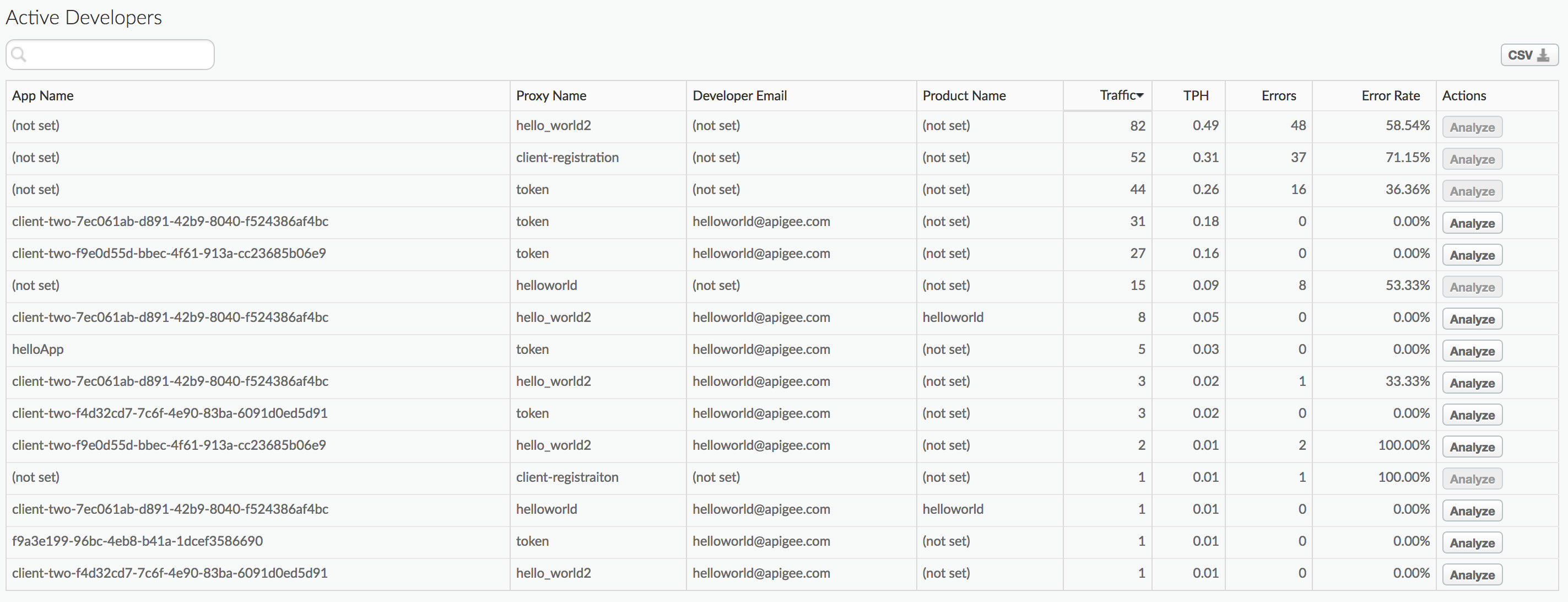

From this, Apigee will know which OAuth client application is calling the API. It will then be able to include this in the analysis of developer engagement.
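The field extraction performed by the ExtractVariables policy earlier in this flow can be mimicked in a few lines of JavaScript, which may help when debugging an unexpected introspection response. This is only a sketch: extractIntrospectionPayload is a hypothetical helper, the field names follow the JSONPath expressions in the policy (phantom_token, scope, delegationId), and the sample values are made up.

```javascript
// Sketch only: mimic the ExtractVariables step on an introspection body.
// Missing fields stay null, much like unresolved variables in the policy.
function extractIntrospectionPayload(content) {
    var parsed;
    try {
        parsed = JSON.parse(content);
    } catch (e) {
        parsed = {}; // not JSON at all: nothing can be extracted
    }
    if (parsed === null || typeof parsed !== "object") {
        parsed = {};
    }
    function pick(name) {
        return typeof parsed[name] === "string" ? parsed[name] : null;
    }
    return {
        jwt: pick("phantom_token"),
        scope: pick("scope"),
        apigeeToken: pick("delegationId")
    };
}

// Hypothetical response body; real values come from Curity.
var sample = '{"phantom_token":"header.payload.signature","scope":"openid",' +
    '"delegationId":"5b1994c9-86e0-42f0-bd35-ca6d610c3536"}';
console.log(extractIntrospectionPayload(sample));
console.log(extractIntrospectionPayload('{"active":false}').jwt === null);
```

A response without the JWT field leaves jwt as null, which is the situation in which the expired-or-invalid-token fault described later in the flow applies.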

On the topic of performance, we do not want these calls to Curity to become a bottleneck. To avoid this, we need to cache the introspection results. The LookupCache policy at the start of the proxy needs a corresponding PopulateCache policy. Now that we have made the introspection call, we can add this optimization. To do so, we need to know how long we should cache the introspection results for. This is actually a security decision. The API gateway knows nothing about what is in the phantom token (i.e., the referent identity data), so assigning an expiry would be arbitrary. Consequently, there is a risk that the enforcement point could cache the data for too long. To avoid this, the proxy should use standard HTTP cache headers from Curity's response to determine how long it is safe to store the introspection results. That cache header will look something like this:

Cache-Control: max-age=289, must-revalidate

Apigee should cache the introspection results according to this header's value. To parse out the max-age value, we need another bit of JavaScript. That is invoked using this policy:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><Javascript async="false" continueOnError="false" enabled="true" timeLimit="200" name="Extract-Cache-Duration"><DisplayName>Extract Cache Duration</DisplayName><Properties/><ResourceURL>jsc://extract-cache-duration.js</ResourceURL><IncludeURL>jsc://shared-functions.js</IncludeURL></Javascript>

This uses the shared functions again together with the following extract-cache-duration.js:

var cacheControlHeaderValue = valueElse(context.getVariable('introspectionResponse.header.cache-control'), "");
// Header is like: Cache-Control: max-age=201, must-revalidate
var cacheControlHeaderValueParts = cacheControlHeaderValue.split(",");
// The default cache duration if Curity doesn't return a Cache-Control header (which it always will,
// but we'll err on the safe side).
var cacheDuration = null;
for (var i = 0; i < cacheControlHeaderValueParts.length; i++) {
    var cacheControlHeaderValuePart = valueElse(cacheControlHeaderValueParts[i], "").trim();
    if (cacheControlHeaderValuePart.startsWith("max-age")) {
        var cacheControlHeaderValuePartParts = cacheControlHeaderValuePart.split("=");
        if (cacheControlHeaderValuePartParts.length == 2) {
            cacheDuration = valueElse(cacheControlHeaderValuePartParts[1], "").trim();
            break;
        }
    }
}
if (isInt(cacheDuration)) {
    context.setVariable("cacheDuration", cacheDuration);
}

This script begins by defensively extracting the header value from the variable introspectionResponse.header.cache-control. If this cannot be found or is found with an invalid value, the introspection results won't be cached. If it can, however, it will store the cache duration in a variable, aptly named cacheDuration. The next step, which is conditional upon this variable being defined, is to actually cache the response from Curity. This is done using the following:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><PopulateCache name="Cache-Introspection-Payload"><DisplayName>Cache Introspected Payload</DisplayName><CacheKey><!-- REF token is used as part of the cache key --><KeyFragment ref="clientRequest.accessToken"/></CacheKey><!-- Can be Exclusive or other values, but those will mean that lookups done by another API can't reuse introspection results. Since the internal phantom token has an audience and APIs will use that to check the applicability, sharing the cache increases throughput without degrading security. --><Scope>Global</Scope><ExpirySettings><!-- Extracted from introspection response's cache-control header --><TimeoutInSec ref="cacheDuration"/></ExpirySettings><Source>introspectionResponse.content</Source></PopulateCache>

This uses the same CacheKey as the previous LookupCache policy did for obvious reasons, and the Scope is also Global. The duration of the cache is what was parsed from the Cache-Control response header; this is ensured by referencing that variable in the TimeoutInSec element of the ExpirySettings. The actual value that is cached is the introspection response's body, as denoted by the introspectionResponse.content variable in the Source element. With the introspection call made and the results cached and with the reporting information captured, there are just two steps left: access control and preparing the downstream API call.
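The interplay between the lookup, the Cache-Control header and the cache expiry can be illustrated with a small in-memory model. This is a sketch under stated assumptions, not how Apigee implements its cache: IntrospectionCache and parseMaxAge are hypothetical names, and the current time is passed in explicitly (in milliseconds) so that the behaviour is easy to follow.

```javascript
// Sketch only: derive a time-to-live from Cache-Control and honour it on lookup.
function parseMaxAge(cacheControlHeaderValue) {
    var parts = (cacheControlHeaderValue || "").split(",");
    for (var i = 0; i < parts.length; i++) {
        var match = /^max-age=(\d+)$/i.exec(parts[i].trim());
        if (match) {
            return parseInt(match[1], 10);
        }
    }
    return null; // no usable max-age, so the result must not be cached
}

// Hypothetical stand-in for the LookupCache/PopulateCache pair.
function IntrospectionCache() {
    this.entries = Object.create(null);
}

IntrospectionCache.prototype.populate = function (refToken, content, cacheControl, nowMs) {
    var maxAge = parseMaxAge(cacheControl);
    if (maxAge === null) {
        return false;
    }
    this.entries[refToken] = { content: content, expiresAt: nowMs + maxAge * 1000 };
    return true;
};

IntrospectionCache.prototype.lookup = function (refToken, nowMs) {
    var entry = this.entries[refToken];
    if (!entry || nowMs >= entry.expiresAt) {
        delete this.entries[refToken]; // drop expired entries
        return null;                   // cache miss: introspect again
    }
    return entry.content;
};

var cache = new IntrospectionCache();
cache.populate("ref-token", '{"scope":"openid"}', "max-age=289, must-revalidate", 0);
console.log(cache.lookup("ref-token", 288000)); // within 289 seconds: cache hit
console.log(cache.lookup("ref-token", 289000)); // at 289 seconds: null
```

Keying the entries by REF token alone corresponds to the Global scope used in the policies.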

Access control is done using scopes. The scopes or rights that the user has delegated to the app can be checked against the requirements of the API. If the API has no specific requirements, the call should be forwarded. If it does, however, and the actual scopes provided are insufficient, then a 403, forbidden, error should be returned; otherwise, the call should be forwarded to the target API. To enforce this, the first thing that needs to be done is to check if there are any scopes set for the API that is being consumed. This can be done by looking up the scopes in a key-value map using the current API as the key:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><KeyValueMapOperations name="Scopes-Key-Value-Map" mapIdentifier="scopes"><DisplayName>Scopes Key Value Map</DisplayName><Get assignTo="scopes" index="1"><Key><Parameter ref="apiproxy.name"/></Key></Get></KeyValueMapOperations>

This key-value map, unlike the ones that store credentials, is not encrypted. Therefore, the variable where these scopes are defined is simply called scopes (as opposed to a variable that is prefixed with private.). To look up the scopes using the API that has embedded the shared flow, the apiproxy.name variable is used in the Get/Key/Parameter element's ref attribute.

With these scopes in hand, the introspection results are first checked to see if there is a JWT token. If not, it means that the token presented by the client is expired or unknown. When this is the case, we should return a 401, access denied. This is done using a conditional step in the flow:

<Step><Name>Expired-Or-Invalid-Access-Token-Fault</Name><Condition>introspectionPayload.jwt is null</Condition></Step>

This step is a RaiseFault policy that looks like this:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><RaiseFault name="Expired-Or-Invalid-Access-Token-Fault"><DisplayName>Expired or Invalid Access Token Fault</DisplayName><FaultResponse><Set><Headers><Header name="WWW-Authenticate">Bearer realm="{organization.name}", scope="{scopes}", error="invalid_token"</Header></Headers><StatusCode>401</StatusCode><Payload contentType="text/plain">Access Denied</Payload><ReasonPhrase>Access Denied</ReasonPhrase></Set></FaultResponse><IgnoreUnresolvedVariables>true</IgnoreUnresolvedVariables></RaiseFault>

This sets the response status code to 401 and includes error information saying that the token was invalid and what scopes are required in a standard WWW-Authenticate response header. This is done in accordance with RFC 6750. It is important to note that the RaiseFault policy includes an IgnoreUnresolvedVariables element with a value of true. The default if this is omitted is to throw a different error (a server error) when a variable is used that is not defined. The scopes variable may not be defined in some cases (for example, when no scopes are configured for the API). When this happens, the access denied error should still be raised. Adding the IgnoreUnresolvedVariables element like this ensures that it will be.
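For reference, the challenge that the client receives has the shape sketched below. The helper buildBearerChallenge is hypothetical and exists only to show how the attributes defined by RFC 6750 (realm, scope, error, error_description) are combined; it assumes the values contain no double quotes, and it simply omits the scope attribute when no scopes are given, which differs slightly from the policy template.

```javascript
// Sketch only: assemble an RFC 6750 WWW-Authenticate challenge string.
function buildBearerChallenge(realm, scope, error, errorDescription) {
    var params = ['realm="' + realm + '"'];
    if (scope) {
        params.push('scope="' + scope + '"');
    }
    if (error) {
        params.push('error="' + error + '"');
    }
    if (errorDescription) {
        params.push('error_description="' + errorDescription + '"');
    }
    return "Bearer " + params.join(", ");
}

// "myorg" is a placeholder for the Apigee organization name.
console.log(buildBearerChallenge("myorg", "openid read", "invalid_token"));
// Bearer realm="myorg", scope="openid read", error="invalid_token"
```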

If the token is valid, on the other hand, and some scopes are found, then these are checked against those provided in the introspection results. This is done using our last JavaScript policy:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><Javascript timeLimit="200" name="Check-Scopes"><DisplayName>Check Scopes</DisplayName><ResourceURL>jsc://check-scopes.js</ResourceURL><IncludeURL>jsc://shared-functions.js</IncludeURL></Javascript>

The check-scopes.js script is also quite defensive and can be run even if no scopes are defined for the API or none are returned from the introspection endpoint. If no scopes are required, the requiredScopesProvided variable will be set to true. If some scopes are defined for the currently-running API, however, the list of actual scopes will be checked against the required ones. If any of the required scopes are missing, then the requiredScopesProvided variable will be set to false. The implementation of this algorithm is shown in the following listing:

var requiredScopes = valueElse(context.getVariable("scopes"), "").split(/\s+/);
var actualScopes = valueElse(context.getVariable("introspectionPayload.scope"), "").split(/\s+/);
var requiredScopesProvided = true;
for (var i = 0; i < requiredScopes.length; i++) {
    var requiredScope = requiredScopes[i];
    if (isBlank(requiredScope)) {
        continue;
    }
    if (actualScopes.indexOf(requiredScope) === -1) {
        requiredScopesProvided = false;
        break;
    }
}
context.setVariable("requiredScopesProvided", requiredScopesProvided);
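The same comparison can be expressed as a pure function, which makes the edge cases easy to check outside Apigee. This is a sketch only; hasRequiredScopes is a hypothetical name, and both arguments are the space-separated strings that the listing above reads from the scopes and introspectionPayload.scope variables.

```javascript
// Sketch only: every required scope must appear among the actual scopes.
function hasRequiredScopes(requiredScopes, actualScopes) {
    var required = (requiredScopes || "").split(/\s+/);
    var actual = (actualScopes || "").split(/\s+/);
    for (var i = 0; i < required.length; i++) {
        if (required[i] === "") {
            continue; // blank entries come from empty or padded strings
        }
        if (actual.indexOf(required[i]) === -1) {
            return false;
        }
    }
    return true;
}

console.log(hasRequiredScopes("", "openid"));                 // true: nothing required
console.log(hasRequiredScopes("foo", "openid"));              // false: foo is missing
console.log(hasRequiredScopes("openid read", "read openid")); // true: order is irrelevant
```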

The next step in the flow is conditional upon the requiredScopesProvided variable being false:

<Step><Name>Invalid-Scopes-Fault</Name><Condition>requiredScopesProvided = false</Condition></Step>

If this condition is true (i.e., the API had scopes defined and they were not present in the access token), the following RaiseFault policy will run:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><RaiseFault name="Invalid-Scopes-Fault"><DisplayName>Invalid Scopes Fault</DisplayName><FaultResponse><Set><Headers><Header name="WWW-Authenticate">Bearer realm="{organization.name}", scope="{scopes}", error="insufficient_scope", error_description="The scopes '{introspectionPayload.scope}' is/are insufficient"</Header></Headers><StatusCode>403</StatusCode><Payload contentType="text/plain">Forbidden</Payload><ReasonPhrase>Forbidden</ReasonPhrase></Set></FaultResponse><IgnoreUnresolvedVariables>true</IgnoreUnresolvedVariables></RaiseFault>

This error also conforms to RFC 6750, which states that a 403, forbidden, should be given when an access token is valid but the scopes are insufficient. When that is the case, the required scopes and the error information are provided in the WWW-Authenticate response header, as in the access denied case. A smart app will use this information to obtain a new access token with sufficient scopes. Finally, if the scopes are valid or if none are required, the request will be updated with the internal JWT token. This is done using an AssignMessage policy like this:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><AssignMessage name="Update-Target-API-Request"><DisplayName>Update Target API Request</DisplayName><AssignTo createNew="false" transport="http" type="request"/><Set><Headers><Header name="Authorization">bearer {introspectionPayload.jwt}</Header></Headers></Set></AssignMessage>

This changes the request object's Authorization header to no longer use the REF token but the internal phantom token by referencing the introspectionPayload.jwt variable. This will be either the cached or newly-obtained value. At this point, any API proxy that embeds this shared flow will be prepared to forward the call to its target API or to perform additional access control or message shaping.

Testing

To test the setup, three things should be done:

- Dynamically register a new client app.

- Use the dynamic client ID and secret to obtain a token using some enabled flow, like the code flow.

- Using this token, call an API proxy that embeds the shared flow.

Dynamically Registering a New App

To register a new app and test that the first API proxy, client registration, works correctly, a request can be made using curl like this:

$ curl -X POST \
    http://myco-myorg-prod.apigee.net/client-registration \
    -H 'authorization: Bearer 813b5dbb-6aeb-41fa-8922-25a0fbeb4460' \
    -H 'content-type: application/json' \
    -d '{"software_id": "dynamic-client-template","developer": "helloworld@apigee.com"}'

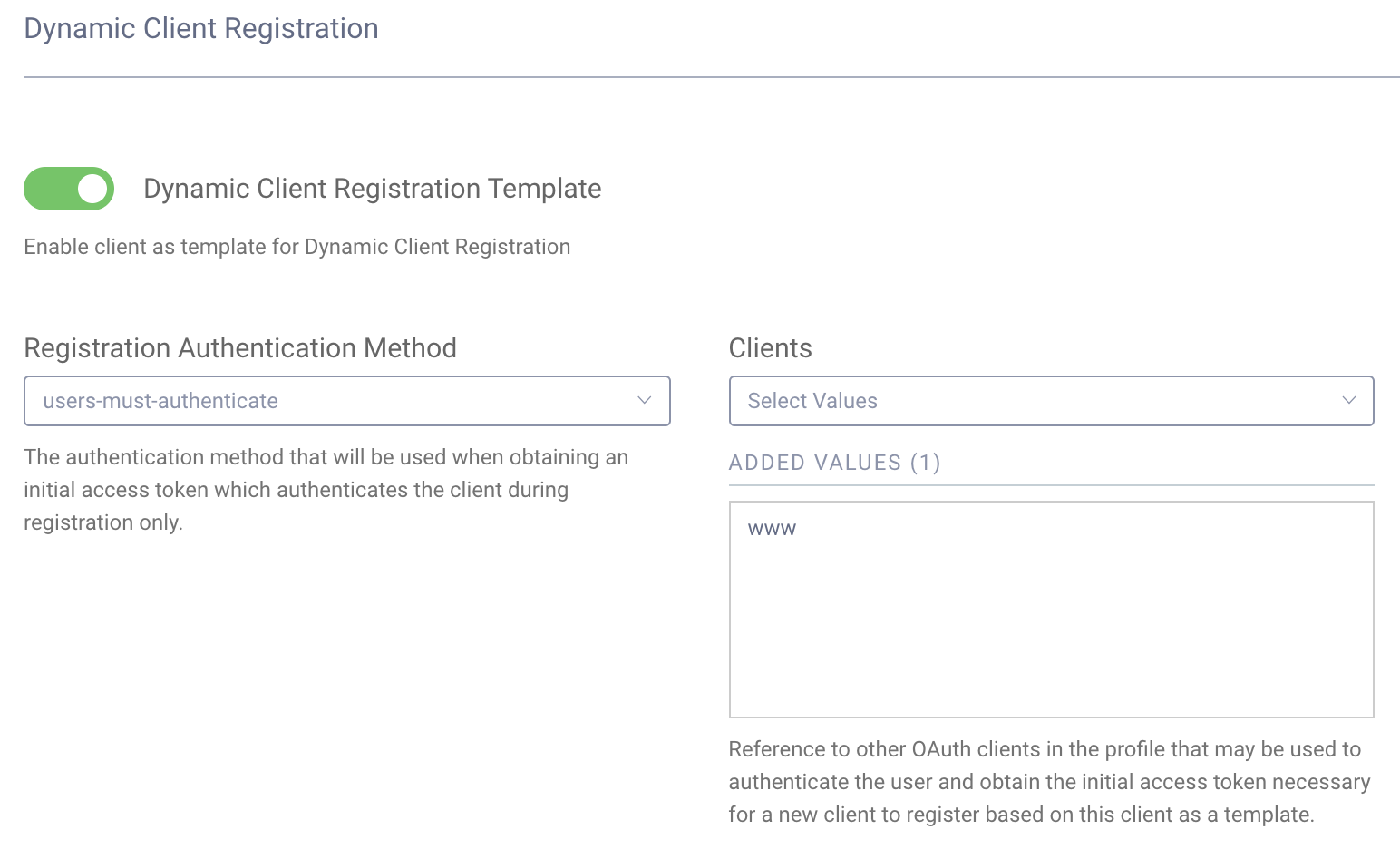

How the access token used to protect this API is obtained will depend on the configuration of the client template. Using the example configuration from the customer support portal, the client template named dynamic-client-template will require the user to authenticate. This can be seen by opening the client template (Profiles → Token Service → Clients → dynamic-client-template). Under the Dynamic Client Registration section of that client, you should see configuration like the following:

If this configuration section is not present, ensure that DCR is enabled on the profile.

Because this is set to user-must-authenticate, the code, implicit, assisted token or Resource Owner Password Credential (ROPC) flow must be used to obtain the access token; which of these is allowed also depends on the client that will authenticate the user. For instance, in the sample config, the client www only has the implicit and code flow capabilities enabled, so assisted token and ROPC cannot be used. The authentication requirements for DCR can also be changed to client-must-authenticate and then the client credentials flow can be used to obtain the initial access token used for registration. In any case, this token must have the dcr scope or else Curity will deny the request.

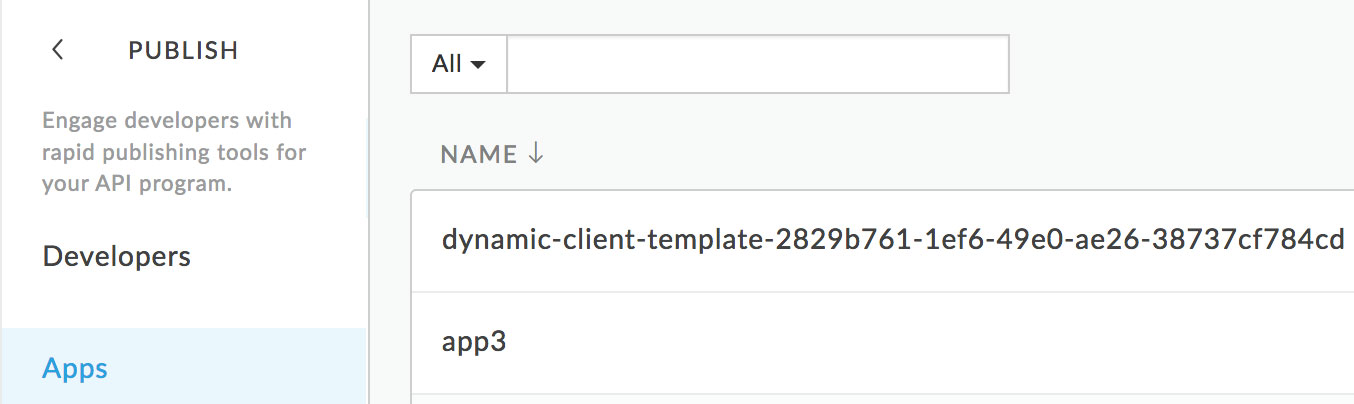

Once an initial access token is obtained and the above curl command (or similar) is executed, the client should be registered. To verify this, the output of curl should be similar to the JSON shown in the second listing. Also, in the Apigee UI, under Publish → Apps, the new app should be shown. The name should be {clientName}-{clientId} as shown in the following screenshot:

If you click this app to drill into it, you'll find a Credentials section with one credential. Clicking the Show button in the Consumer Key column should reveal the dynamically-generated client ID provided by Curity in the DCR response and also output by curl. If you see these things, client registration is working.

Using the Dynamic Client

If the client was created successfully, it should be possible to obtain a token with it. Any of the flows supported by the template will be usable. For instance, if the code flow is enabled (as it is in the sample config), you should be able to start the code flow in a browser by making a request similar to the following (where the client_id, redirect_uri, and scope are the ones returned from the DCR response):

http://localhost:8443/authorize?response_type=code&client_id=f9e0d55d-bbec-4f61-913a-cc23685b06e9&redirect_uri=https://localhost:8000/cb&scope=openid

When this completes and you are redirected to the callback URI, the client ID can be combined with the secret to redeem the code. While doing this, use the trace tool for the token API proxy in Apigee: when the code is redeemed and a new access token is issued, a call to that proxy should be shown and the result should be a 200, OK. If it is and a token is also presented to your client app, then the token registration in Apigee and the event listener in Curity have been set up correctly.
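If you prefer to assemble the authorization request programmatically rather than by hand, a sketch like the following can be used. The function buildAuthorizeUrl is a hypothetical helper; the endpoint, client ID, redirect URI and scope shown are only the example values from this section and must be replaced with the ones returned in your own DCR response. It relies on the standard URLSearchParams and URL classes, which are available in browsers and Node.js.

```javascript
// Sketch only: build a code flow authorization request URL.
function buildAuthorizeUrl(authorizeEndpoint, clientId, redirectUri, scope) {
    var params = new URLSearchParams({
        response_type: "code",
        client_id: clientId,
        redirect_uri: redirectUri, // percent-encoded by URLSearchParams
        scope: scope               // multiple scopes are space-separated
    });
    return authorizeEndpoint + "?" + params.toString();
}

var authorizeUrl = buildAuthorizeUrl(
    "http://localhost:8443/authorize",
    "f9e0d55d-bbec-4f61-913a-cc23685b06e9",
    "https://localhost:8000/cb",
    "openid");
console.log(authorizeUrl);
```

Encoding the parameters this way avoids problems with reserved characters in the redirect URI or with several space-separated scopes.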

Using and Introspecting the Token

To test the introspection shared flow, you will need to make an API proxy that embeds it. You can do so by making a proxy that targets some sort of echo service (e.g., https://postman-echo.com/get). Add the shared flow to this proxy. You may also add a FaultRule that gives a nicer response if the call to Curity's introspection fails for some reason. A sample proxy flow is shown in the following listing:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><ProxyEndpoint name="default"><FaultRules><FaultRule name="introspection-server-error-rule"><Step><Name>Bad-Gateway-Fault</Name></Step><Condition>introspectionResponse.status.code = 401 ||introspectionResponse.status.code = 403 ||introspectionResponse.status.code = 404 ||introspectionResponse.status.code = 502 ||introspectionResponse.status.code = 503</Condition></FaultRule></FaultRules><PreFlow name="PreFlow"><Request><Step><Name>Instospect-Token</Name></Step></Request><Response/></PreFlow><PostFlow name="PostFlow"><Request/><Response/></PostFlow><Flows/><HTTPProxyConnection><BasePath>/hello_world2</BasePath><Properties/><VirtualHost>default</VirtualHost></HTTPProxyConnection><RouteRule name="default"><TargetEndpoint>default</TargetEndpoint></RouteRule></ProxyEndpoint>

This Bad-Gateway-Fault RaiseFault policy is simply this:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><RaiseFault name="Bad-Gateway-Fault"><DisplayName>Bad Gateway Fault</DisplayName><FaultResponse><Set><Headers/><Payload contentType="text/plain">Bad Gateway</Payload><StatusCode>502</StatusCode><ReasonPhrase>Bad Gateway</ReasonPhrase></Set></FaultResponse></RaiseFault>

This is not required but the following FlowCallout certainly is:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?><FlowCallout name="Instospect-Token"><DisplayName>Instospect Token</DisplayName><SharedFlowBundle>Introspection</SharedFlowBundle></FlowCallout>

This performs all of the introspection steps, including caching and preparing the internal JWT token to be forwarded to the target service. This keeps each API proxy very simple and easy to manage.

Try calling this API proxy. If no access token is included, a 401, access denied, error should result. Add an Authorization request header with a value of zort. This should produce a 401, access denied error as well. Send in a proper access token and you should get a 200, OK, together with some kind of response from the downstream API. As one final test, in Apigee, go to the Environment Configuration section and add the API that has embedded the shared flow to a key-value map named scopes. The name of the entry should match the API's name and the value should be some scope. Start with a scope named foo (which doesn't exist in Curity). Save this and call the API with a valid access token. Instead of getting a reply now, you should get a 403, forbidden. Change the value of the key-value mapping to have some scope or scopes that are in the token (e.g., openid)[2]. Make the call again and you should get a response from the downstream API. Note how the token presented to the downstream API is the phantom token which can then be verified and used without any connection back to Curity, since it is a by-value JWT token.

Conclusion

If you made it through this tutorial, you now have a very secure and scalable API security platform. The combination of Curity and Apigee provides you with:

- A standards-based integration of your API Management System (AMS) and your Identity Management System (IMS).

- An authentication service that can fulfill very advanced login, SSO, MFA, and authentication requirements.

- A purpose-built OAuth and OpenID Connect token service that can perform all of the standard flows plus others in a way that is easy to manage, configure and extend.

- Accurate reporting around developer engagement.

For a more detailed overview of this type of architecture, refer to How to Control User Identity within Microservices on the Nordic APIs blog.

Appendix 1: Apigee Environment Configuration Settings

This tutorial uses various Environment Configurations in Apigee. These are summarized in the following tables.

Key Value Maps

| Key-value Map Name | Key Name | Description |
|---|---|---|
| apigeeCredentials | id | The ID/username used to authenticate to the Apigee management API |
| apigeeCredentials | secret | The associated secret/password |
| introspectionCredentials | id | The ID/username used to authenticate to Curity's introspection endpoint |
| introspectionCredentials | secret | The associated secret |
| oauthServerCredentials | id | The ID/username used to authenticate Curity to Apigee when Curity registers a token at the token API proxy |
| oauthServerCredentials | secret | The associated secret |
| environmentSettings | introspectionPath | The URI of the introspection endpoint in Curity if /oauth/v2/oauth-introspect is not used |
| scopes | Some API proxy's name (e.g., helloworld) | Some space-separated list (e.g., openid read) |

Target Servers

| Name | Host | Port |
|---|---|---|
| dcrServer | The hostname (without http or https) of the Curity server that provides the DCR endpoint | Typically 8443 |
| introspectionServer | The hostname (without http or https) of the Curity server that provides the introspection endpoint; typically the same as dcrServer but can be different | Typically 8443 |

1. This popup may be blocked by your browser if you've never allowed them from the Curity admin UI before.

2. If you include multiple scopes, separate them by a space.
