Design MCP Authorization for APIs

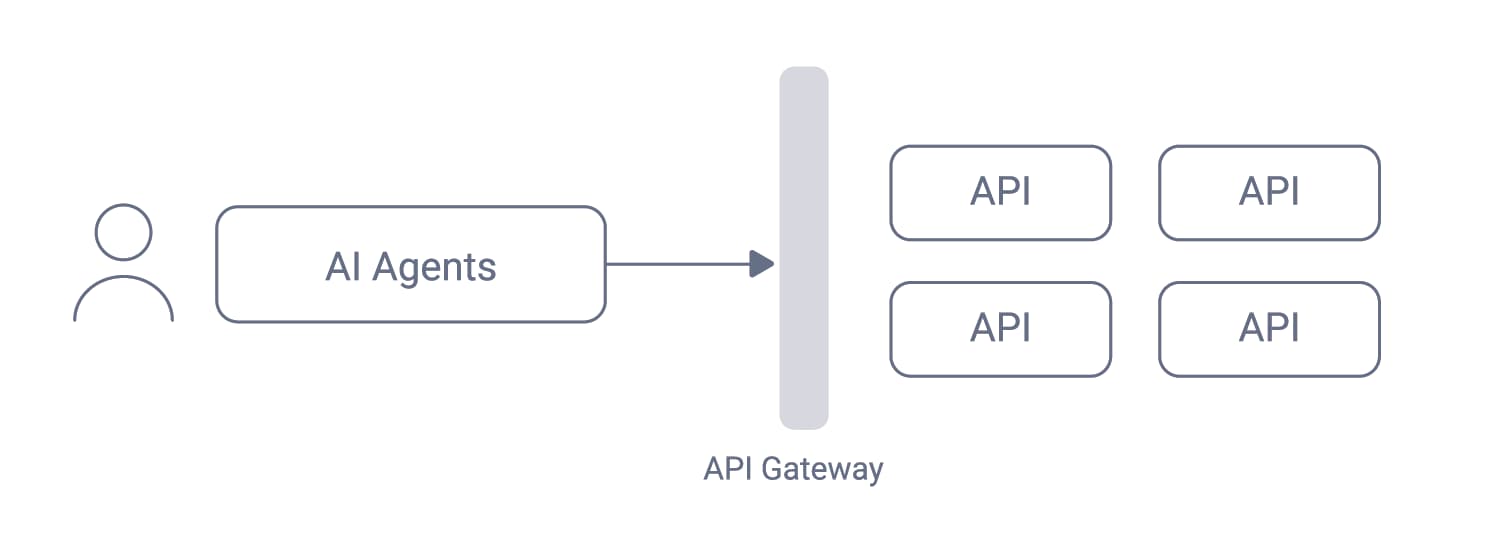

AI Agents and API Access

AI agents provide goal-oriented experiences for users, where a human sets an objective using natural language and the agent can choose actions to fulfill that objective. An AI agent uses a large language model (LLM) to process user input and determine actions. To fulfill the user's objective, these actions can operate on local resources, or call remote resource servers like web APIs.

AI agents could call APIs in many use cases. For example, employees might use AI agents to conveniently access authorized corporate information. For organizations that use APIs to expose and monetize information, AI agents could become a new category of clients that can extend the reach of APIs.

Solutions must support secure API requests from AI agents, so that actions taken on behalf of users are properly authenticated and authorized. This article explains the security building blocks that organizations can use to enable the correct API access. The MCP Authorization Lifecycle article dives into more technical aspects around MCP authorization.

Key Takeaways

Secure MCP-based API access requires least-privilege, OAuth-driven authorization that extends beyond the MCP server.

- MCP authorization secures access between MCP clients and servers but does not replace API-level authorization.

- Use OAuth to authenticate MCP clients and issue short-lived access tokens.

- Issue opaque access tokens to AI agents and MCP clients to reduce data leakage and misuse.

- APIs must validate audience, scopes, and claims to ensure requests are authorized correctly.

- Apply hardened OAuth controls in untrusted or cross-organization environments.

Model Context Protocol

AI technologies evolve rapidly. The Model Context Protocol (MCP) is an example of an AI technology that proposes standardized, interoperable and scalable ways to connect AI agents to data sources and tools. AI agents can use MCP clients that connect to remote MCP servers that provide API entry points.

The MCP authorization specification provides a security design based on the OAuth 2.1 Authorization Framework. OAuth is an API security solution that works for MCP clients as well as for any other API client. It provides building blocks that help enable the following behaviors for MCP clients.

- Zero-delay client onboarding without human action.

- User onboarding and user authentication.

- Human approval of the areas of data and the operations the MCP clients can access.

- Providing a restricted API message credential (access token) with which MCP clients call APIs.

- Ensuring the correct API authorization to restrict access.

API Architectures

To expose API data to MCP clients, organizations provide MCP servers. These HTTPS entry points must support authenticated API requests and implement authorization policies that align with AI security architecture principles.

MCP servers can be a thin proxy layer, like a utility API, that forwards requests to existing APIs. The following diagram shows an architecture where various types of clients use access tokens to call APIs. Both MCP clients and MCP servers need to use access tokens securely.

AI agents present new challenges when securing API requests, especially in dynamic or untrusted environments. Organizations may have no control over AI agents or MCP clients. When exposing APIs to MCP clients, organizations risk exposing potentially sensitive information to unauthorized parties, such as intellectual property (IP) or data owned by business partners, customers or users. Therefore, it is especially important that APIs correctly authorize access to data before granting AI agents access.

Implementing MCP Authorization

To implement the MCP authorization specification, use an authorization server that provides the standards-based endpoints. Also implement an MCP server that provides resource server entry points. The MCP Authorization Lifecycle article describes the details in depth. The following sections provide some additional recommendations to ensure a secure solution.
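As a rough illustration of a resource server entry point, the sketch below assumes that the MCP server publishes OAuth protected resource metadata (RFC 9728) and points unauthenticated clients to it with a `WWW-Authenticate` header. The URLs, the scope name and the function names are hypothetical and are not taken from this article.

```python
# Hypothetical URLs, used only for illustration.
MCP_SERVER_URL = "https://mcp.example.com"
AUTHORIZATION_SERVER_URL = "https://login.example.com"


def protected_resource_metadata() -> dict:
    """Build a document the MCP server could serve at
    /.well-known/oauth-protected-resource (RFC 9728)."""
    return {
        "resource": MCP_SERVER_URL,
        "authorization_servers": [AUTHORIZATION_SERVER_URL],
        "scopes_supported": ["mcp:tools"],  # hypothetical scope name
        "bearer_methods_supported": ["header"],
    }


def unauthorized_response() -> tuple:
    """Return the status code and headers for a request that has no valid access token."""
    metadata_url = f"{MCP_SERVER_URL}/.well-known/oauth-protected-resource"
    headers = {"WWW-Authenticate": f'Bearer resource_metadata="{metadata_url}"'}
    return 401, headers
```

An MCP client that receives the 401 response could fetch the metadata document to discover which authorization server to use before it runs an OAuth flow.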

Use API Security Best Practices

Before enabling MCP clients to access sensitive data, review the state of existing API security controls. In particular, understand how to enforce least-privilege access, implement human-in-the-loop constructs and keep MCP client access short-lived, so that clients have zero standing privilege. The API Security Best Practices for AI agents article summarizes a number of techniques.

Use Opaque Access Tokens

Access tokens should contain information to securely communicate context to APIs, which can then easily adjust their existing authorization. However, AI agents should not be able to read those values. Instead, AI agents should receive opaque access tokens (also called reference access tokens).
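The idea of a reference token can be sketched as follows. This is a minimal in-memory stand-in for an authorization server's token storage, not how any particular product implements it. The class name, method names and default lifetime are assumptions made for illustration.

```python
import secrets
import time
from typing import Dict, Optional


class ReferenceTokenStore:
    """Hypothetical in-memory stand-in for the authorization server's token storage."""

    def __init__(self) -> None:
        self._claims_by_token: Dict[str, dict] = {}

    def issue(self, claims: dict, lifetime_seconds: int = 300) -> str:
        """Store the claims server-side and return a random handle that reveals nothing."""
        token = secrets.token_urlsafe(32)
        expiry = int(time.time()) + lifetime_seconds
        self._claims_by_token[token] = {**claims, "exp": expiry}
        return token

    def introspect(self, token: str) -> Optional[dict]:
        """Return the claims for an active token, or None if it is unknown or expired."""
        claims = self._claims_by_token.get(token)
        if claims is None or claims["exp"] <= time.time():
            return None
        return claims
```

The client only ever holds the random handle. A trusted backend component resolves the handle to the claims, so the AI agent cannot read them.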

The following diagram illustrates a recommended token flow for the connection from an MCP client to the MCP server. The client runs OAuth flows and retrieves an opaque access token that does not reveal any sensitive API information to the MCP client. The API gateway uses the phantom token pattern to deliver a JWT access token to the MCP server. The MCP server validates the JWT and, in particular, it rejects access tokens that do not contain the MCP server's audience. An MCP server could also require particular scopes from MCP clients.
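The audience and scope checks described above could look something like the following sketch. It assumes that a JWT library has already verified the token's signature and issuer and has produced the `claims` dictionary, so it shows only the claim checks. The exception and function names are hypothetical.

```python
import time
from typing import Iterable, Optional


class TokenRejected(Exception):
    """Raised when an access token does not meet the MCP server's requirements."""


def validate_claims(
    claims: dict,
    expected_audience: str,
    required_scopes: Iterable[str],
    now: Optional[float] = None,
) -> None:
    """Check expiry, audience and scopes on claims from an already verified JWT."""
    current_time = time.time() if now is None else now
    if claims.get("exp", 0) <= current_time:
        raise TokenRejected("token has expired")

    # The aud claim can be a single string or a list of strings.
    aud = claims.get("aud", [])
    audiences = [aud] if isinstance(aud, str) else list(aud)
    if expected_audience not in audiences:
        raise TokenRejected("token was not issued for this MCP server")

    # The scope claim is a space-separated string.
    granted = set(claims.get("scope", "").split())
    missing = set(required_scopes) - granted
    if missing:
        raise TokenRejected("missing scopes: " + ", ".join(sorted(missing)))
```

A server would typically translate `TokenRejected` into a 401 or 403 response rather than returning the failure details to the client.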

Harden MCP Authorization

The current MCP authorization specification could result in MCP clients and MCP servers that implement only basic authentication and security in OAuth flows. For example, AI agents in user-facing applications might run as public clients that do not store tokens securely, or MCP servers might not verify an MCP client with a high level of assurance. For APIs that expose high-value data, those behaviors could introduce unacceptable risks.

With OAuth, you can go beyond the recommendations in the specification to provide high-security ecosystems. For example, organizations in particular industries could develop high-security AI agents and MCP servers using financial-grade techniques from hardened profiles like Open Banking or other open secure ecosystems.

The following techniques would provide strong security guarantees, where each client and server uses the strongest security to prove its non-human identity (NHI) to recipients.

- Web MCP clients could follow Web Best Practices to use a Backend for Frontend and strong browser defenses.

- Mobile MCP clients could follow Mobile Best Practices and use application attestation.

- Cross-organization DCR could use Client Credentials and Software Statements.

- Cross-organization MCP requests could use Sender-constrained Access Tokens.
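As one possible illustration of sender-constrained access tokens, the sketch below assumes DPoP (RFC 9449), where the access token carries a `cnf.jkt` claim containing the thumbprint of the client's public key (RFC 7638). The article does not say which mechanism to use, so treat DPoP as an assumption. The sketch shows only the key-binding check. A real receiver would also verify the DPoP proof's signature, HTTP method, URL, timestamp and replay identifier. Function names are hypothetical.

```python
import base64
import hashlib
import hmac
import json

# Members that RFC 7638 (and RFC 8037 for OKP keys) includes in the thumbprint input.
REQUIRED_MEMBERS = {
    "EC": ("crv", "kty", "x", "y"),
    "RSA": ("e", "kty", "n"),
    "OKP": ("crv", "kty", "x"),
}


def jwk_thumbprint(jwk: dict) -> str:
    """Compute the SHA-256 JWK thumbprint as an unpadded base64url string."""
    members = {name: jwk[name] for name in REQUIRED_MEMBERS[jwk["kty"]]}
    # Canonical form: required members only, sorted keys, no whitespace.
    canonical = json.dumps(members, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(canonical.encode("utf-8")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def is_bound_to_key(claims: dict, proof_jwk: dict) -> bool:
    """Return True if the token's cnf.jkt claim matches the key that signed the proof."""
    expected = claims.get("cnf", {}).get("jkt")
    if not expected:
        return False
    return hmac.compare_digest(expected, jwk_thumbprint(proof_jwk))
```

With a check like this, a stolen access token is of little use to a party that does not also hold the client's private key.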

Example Deployment

The Implementing MCP Authorization code example provides an end-to-end deployment that uses MCP authorization. Multiple MCP clients can call a stateless MCP server that acts as an entry point to an existing OAuth-secured API. You can run the example on a local computer and connect any standards-based MCP client to the MCP server, to enable secured AI agent access to APIs.

The example shows how to provide a secured MCP server entry point and issue opaque access tokens to MCP clients. The MCP server receives a JWT access token and validates the token to ensure that it meets the MCP server's requirements. The MCP server then invokes MCP tool logic to call the upstream API. The logic uses token exchange to get a new access token with a different audience, suitable for the upstream API.
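The token exchange step could be sketched as below, using the parameter names from OAuth 2.0 Token Exchange (RFC 8693). This is not the code from the example deployment. The function name and the audience and scope values are hypothetical, and client authentication to the token endpoint is omitted for brevity.

```python
from urllib.parse import urlencode


def build_token_exchange_request(subject_token: str, audience: str, scope: str) -> str:
    """Build the form-encoded body for an RFC 8693 token exchange request."""
    return urlencode(
        {
            "grant_type": "urn:ietf:params:oauth:grant-type:token-exchange",
            # The access token that the MCP server received and validated.
            "subject_token": subject_token,
            "subject_token_type": "urn:ietf:params:oauth:token-type:access_token",
            # The upstream API that the new token should be issued for.
            "audience": audience,
            # Request only the scopes the upstream call needs.
            "scope": scope,
        }
    )
```

The MCP server would send this body in a POST request to the authorization server's token endpoint with the `application/x-www-form-urlencoded` content type, then use the returned access token when calling the upstream API.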

In particular, the example shows how to use the Curity Identity Server to take control over security at each stage of the MCP authorization flow and issue least-privilege access tokens to MCP clients.

A CIAM Ecosystem

AI agents provide great potential to expose enriched information to users, but they require the use of open standards. To expose data in such an ecosystem, use OAuth standards to control data security. OAuth is a family of specifications for securing APIs at scale, while also considering user privacy and client environments. If you already use OAuth to secure APIs, a thin MCP server layer may be sufficient for your initial AI use cases. If you don't yet use OAuth security, consider security modernization as part of your AI initiatives.

OAuth in a dynamic ecosystem places greater demands on the authorization server. To meet those demands, an authorization server needs Customer Identity and Access Management (CIAM) qualities, with strong support for security standards. You also need extensibility in the important places, like user onboarding and user authentication. Even more importantly, you must be able to issue confidential and least-privilege access tokens.

In summary, securing API requests from AI agents involves a lifecycle that begins with discovery and registration and extends through token issuance and access control across one or more APIs. Following these steps ensures that AI-driven API access remains secure and scalable.

Gary Archer

Product Marketing Engineer at Curity
