User Consent Best Practices in the Age of AI Agents

Every day we use systems, applications, and websites for all sorts of purposes — work, personal, entertainment. Many systems are interconnected, where you might use one application to aggregate data and another to modify data.

Think of an application that books a restaurant table that can access your calendar to add a reminder about the booking. Whenever you delegate access from one application to another — especially when they come from different vendors — you want to remain in full control of what the delegate will be able to do in the remote system.

This mechanism is called consent: you give explicit consent as to what an application may do in your name. With the rising popularity of autonomous agents, that is, applications powered by large language models (LLMs) that can perform actions in other applications, consent management matters more than ever.

What is User Consent and How Does It Empower Users?

In the context of applications and digital systems, consent is the explicit granting of privileges to an application. When a user wants one application to be able to access their data or act on their behalf in another application, they need to acknowledge:

What application is asking to be granted access?

To what application will the access be granted?

What data will the asking application be able to read?

What data will the asking application be able to modify?

For how long will the asking application have this access?
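The five questions above map naturally onto a small data structure that a consent screen could be rendered from. The following is a minimal sketch only; the class name, field names, and example values are all hypothetical and not taken from any particular product.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Tuple


@dataclass(frozen=True)
class ConsentRequest:
    """What a consent screen needs to show (all names are illustrative)."""
    requesting_app: str              # which application is asking
    target_app: str                  # where the access will be granted
    read_access: Tuple[str, ...]     # data the requester may read
    write_access: Tuple[str, ...]    # data the requester may modify
    duration: timedelta              # how long the access lasts

    def summary(self) -> str:
        """Render a plain-text summary answering the five questions."""
        hours = self.duration.total_seconds() / 3600
        return (
            f"{self.requesting_app} wants access to {self.target_app}\n"
            f"Read: {', '.join(self.read_access) or 'nothing'}\n"
            f"Modify: {', '.join(self.write_access) or 'nothing'}\n"
            f"Valid for: {hours:g} hours"
        )


# Hypothetical example: a booking app asking for calendar access.
request = ConsentRequest(
    requesting_app="Table Booker",
    target_app="Calendar",
    read_access=("free/busy times",),
    write_access=("reminders",),
    duration=timedelta(hours=1),
)
print(request.summary())
```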

Explicit user consent is typically collected when the two applications in question come from different vendors, which is what we call “third-party applications” (less commonly, it is also collected when the applications come from the same vendor but are governed by different terms and conditions). When a mobile application from company A wants to access a backend API of that same company, it will normally not ask for explicit consent.

The user already granted consent to how the company processes their data when they created an account with company A. When the application wants to access APIs that belong to another company, however, the user should have explicit control over this.

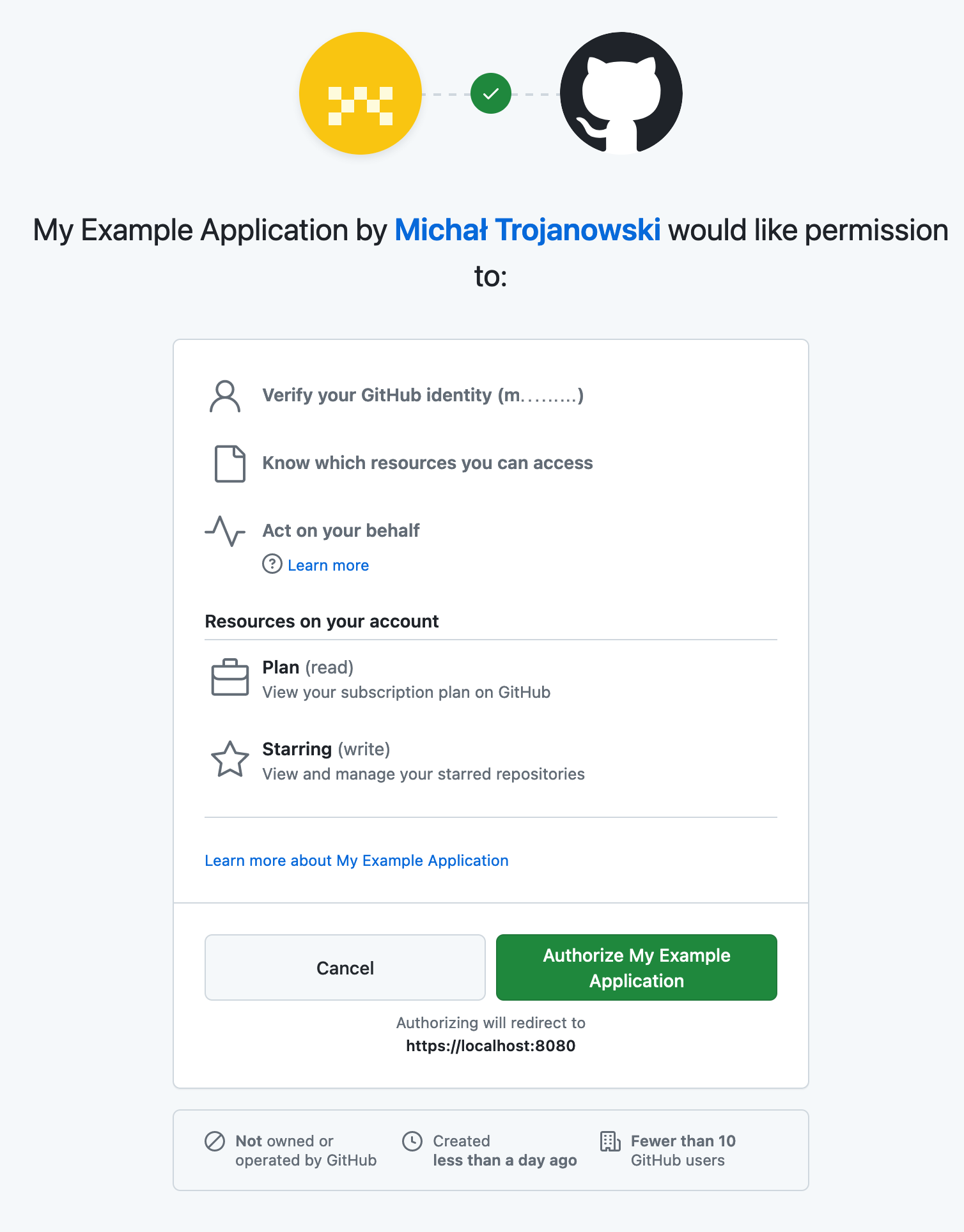

When the user is asked to grant access, they are presented with a consent screen that should contain all the relevant information needed to make an informed decision. It is a tricky balancing act: the screen should be neither cluttered with too much information nor too vague.

The user should be able to easily identify what application is asking for access and what it will do with their data. Below is an example of a well-designed consent screen used by GitHub:

Clear information on the consent screen also helps prevent impersonation attacks, where a malicious application pretends to be a legitimate one. Furthermore, it allows the user to ensure that they give an application the minimum amount of privileges that it needs to perform its intended tasks.

Very often, consent is asked for only once for a given application. Once you give the application access to a given set of privileges, you will not have to do it again, unless the application wants to change the set of permissions. This means that organizations should allow users to manage the consents they’ve given.

You should be able to browse the applications you have granted access to and revoke that access when needed, for example when you no longer use an application or you suspect that it has been compromised.

Enter AI Agents

AI agents are hailed as the next step in the evolution of AI applications. LLM-based applications offer a natural-language interface to query the model for information and let you converse freely with a chatbot. As agents, these applications gain the ability to perform actual tasks, like placing orders or bookings, sending emails, or filling in and submitting forms. To achieve that, agents invoke preprogrammed “tools.”

There are different ways to implement a tool in an AI application, but a popular one is to call an API to read or modify data. When the AI application calls an API, it becomes that API’s client, with the usual client requirements: it needs credentials to call the API on the user’s behalf.

One of the most popular approaches for applications to gain access to APIs is to use OAuth and access tokens. OAuth is an established standard that supports granting least-privilege access to limit what the application is able to do at the API. OAuth flows, used to obtain tokens, also support the collection of granular user consent for autonomous agents. Applications use scopes to get credentials with limited capabilities, and users can use that information to decide whether to grant or deny access to their data.
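To make the role of scopes concrete, here is a minimal sketch of how a client might build an OAuth authorization request that asks for a narrow set of scopes. The endpoint URL, client ID, redirect URI, and scope names are placeholders invented for illustration; the parameter names (`response_type`, `client_id`, `redirect_uri`, `scope`, `state`) come from the OAuth 2.0 authorization code flow. A production client would normally also use PKCE, which is left out here for brevity.

```python
import secrets
from urllib.parse import parse_qs, urlencode, urlparse


def build_authorization_url(authorize_endpoint, client_id, redirect_uri, scopes):
    """Build an authorization code request limited to the given scopes."""
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": " ".join(scopes),            # scopes are space-delimited
        "state": secrets.token_urlsafe(16),   # random value to bind the response
    }
    return f"{authorize_endpoint}?{urlencode(params)}"


# Hypothetical values: the agent only asks to read the calendar and add reminders.
url = build_authorization_url(
    "https://login.example.com/oauth/authorize",
    client_id="booking-agent",
    redirect_uri="https://agent.example.com/callback",
    scopes=["calendar.read", "reminders.write"],
)
requested = parse_qs(urlparse(url).query)["scope"][0]
print(requested)
```

The authorization server can then list exactly these scopes on the consent screen, so the user decides based on what the client actually requested.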

This approach can be used in pretty much the same way when working with AI agents.

Consent in the Context of AI Agents

From a purely technical perspective, an AI agent is just another client that requires access to the API — it needs to obtain access tokens to be able to perform actions on the API. However, agents have some important differences from the applications that we are used to operating. When you use a “regular” application, most of the time, it is you who is actually operating it. You click on buttons, enter data into form fields, and select options.

Even if the application or system has parts that are automated, or run in the background, you are pretty confident of the actions that will be undertaken — if you click a button that says “move all files,” you don’t expect the application to start sending emails. When you introduce an AI agent, however, you add a sort of intermediary that is capable of some autonomy.

This means that the agent can decide to perform some actions because it “thinks” they are needed to fulfill the task that you originally asked it to do. You might not have total control of the actions that the agent will perform. And sometimes, unfortunately, the actions can meander into unexpected territory, either because the agent “hallucinated” what it needed to do, or because it was accidentally or deliberately tricked through prompt injection into taking dubious actions. Because of this, user consent for AI agents must be designed so that users retain control over any AI-driven action.

This is why you should approach the topic of consent a bit differently when it comes to agents. Firstly, you should treat all agents like third-party applications. This means that the user should give explicit consent whenever delegating access to an AI agent. It allows the user to stay informed of what actions the agent will be able to perform and for how long. This ensures proper user control over AI agent actions and prevents unintended behavior.

The Need for Fine-Grained Permissions

Secondly, the consent should be even more fine-grained when it comes to the privileges the agent receives. Ideally, the agent should receive only the permissions it needs to perform the concrete action that it currently plans to perform — if the agent decided that it needs to check the content of emails and properly label them, then the access token should not allow the agent to send or delete emails. This means that backend systems and APIs should be prepared to operate with a granular approach to authorization.
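The email example can be sketched as a scope check performed by the API before it executes an operation. This is an illustrative sketch only: the operation names and scope names are hypothetical, and a real API would first validate the access token before reading its scopes.

```python
# Hypothetical mapping from API operations to the scopes each one requires.
REQUIRED_SCOPES = {
    "read_email": {"mail.read"},
    "label_email": {"mail.read", "mail.label"},
    "send_email": {"mail.send"},
    "delete_email": {"mail.delete"},
}


def is_allowed(operation: str, token_scope: str) -> bool:
    """Return True only if the token carries every scope the operation needs."""
    required = REQUIRED_SCOPES.get(operation)
    if required is None:
        return False  # unknown operations are denied by default
    granted = set(token_scope.split())
    return required <= granted


# A token issued for the "read and label" task.
labeling_token_scope = "mail.read mail.label"
print(is_allowed("label_email", labeling_token_scope))
print(is_allowed("send_email", labeling_token_scope))
```

With scopes this narrow, a token issued for labeling is useless for sending or deleting, even if the agent is tricked into trying.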

Time-Limited and Transaction-Based Consent

Finally, you want the consent to always be time-limited, unlike with regular applications. The consent should expire automatically so that the agent cannot unexpectedly regain access to a system later on. Even better, the consent should not be persisted at all but given on a per-transaction basis: the user is prompted for consent every time the agent asks for a new set of tokens.
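A consent record with both properties, an expiry time and optional single use, might look like the sketch below. The class and field names are hypothetical, and in practice the authorization server would hold this state, not the agent.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Consent:
    """A time-limited consent that can optionally cover one transaction only."""
    agent_id: str
    scopes: frozenset
    expires_at: datetime
    single_use: bool = True
    used: bool = False

    def authorize(self, scope: str, now: datetime) -> bool:
        """Check the consent for one request and record that it was used."""
        if now >= self.expires_at:
            return False  # consent has expired
        if self.single_use and self.used:
            return False  # per-transaction consent was already spent
        if scope not in self.scopes:
            return False  # the user never consented to this scope
        self.used = True
        return True


granted_at = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
consent = Consent(
    agent_id="mail-agent",
    scopes=frozenset({"mail.label"}),
    expires_at=granted_at + timedelta(minutes=5),
)
first = consent.authorize("mail.label", granted_at + timedelta(minutes=1))
second = consent.authorize("mail.label", granted_at + timedelta(minutes=2))
print(first, second)
```

Here the second call is refused even though the consent has not yet expired, so the agent would have to send the user back through the consent screen.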

Balancing Usability With Security

Following such an approach can mean that the user has to give their consent numerous times during one session with an agent, or even while a single request is processed. This is a delicate but important balancing act: the user should not be overwhelmed with consent prompts, as that raises the risk of blindly approving everything. At the same time, the user should not be limited to giving consent only once, with overprivileged access.

Best Practices for Granting AI Agents Access to APIs

The best practices below outline how you, as a user, should behave when interacting with AI agents. To be able to act this way, however, AI application vendors and API vendors must implement their systems in a way that allows users to follow these recommendations. The recommendations therefore also serve as a guideline for vendors.

Always Limit the API Privileges of AI Agents

You should apply the principle of least privilege: give the agent the minimum set of permissions it needs to perform the task at hand. This way, you limit the damage the agent could do. It’s better to grant the agent permissions numerous times, increasing the privilege if required, than to allow the agent over-privileged access to APIs.

Make Sure the User Consent Expires

Ensure that the consent you grant to an agent expires. Ideally, the consent should be valid only for the time of the current request or session. This means that you will have to consent every time the agent needs a new set of tokens to call the API. Note that asking for consent is unrelated to any user session you might have with the token provider.

You shouldn’t have to reauthenticate every time you see the consent screen. Vendors should allow you to choose how long you are granting the consent. For longer-running jobs, or when you know that the agent will constantly work in the background, you might want to grant a longer-living consent.

Use Reconsent Before High Privilege Operations

In some cases, the AI agent may receive an initial access token and then need to perform a higher-privilege API operation that is outside the user’s original consent. APIs can deny access and trigger a step-up flow in which the user must grant consent again. The AI agent can then be issued an access token with a higher-privilege scope that the API accepts, so that consent keeps pace with what the agent actually does.

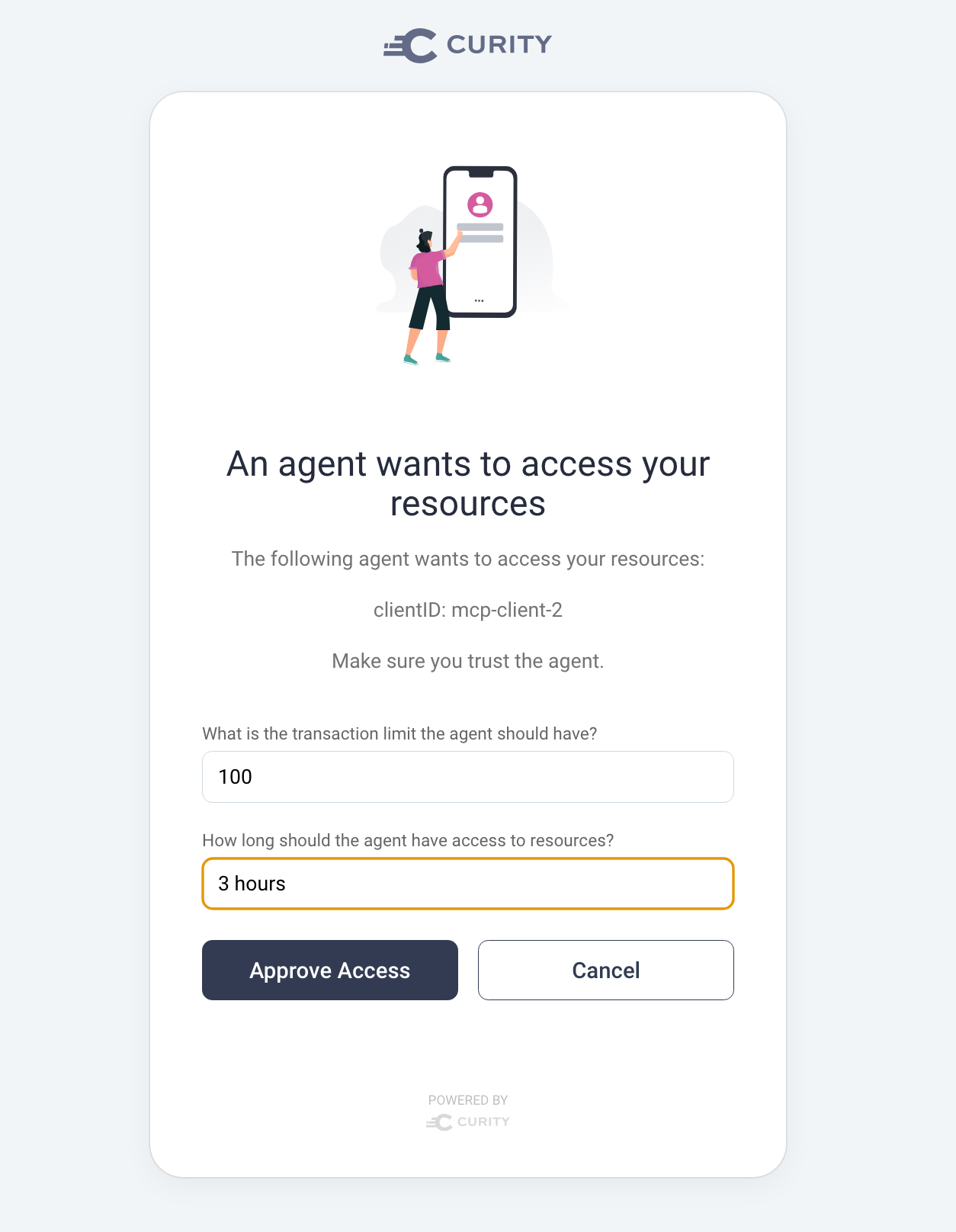
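One way an API can signal that reconsent is needed is the `insufficient_scope` error defined for OAuth bearer tokens, returned with HTTP status 403 in the `WWW-Authenticate` header. The sketch below shows both sides under simplifying assumptions: the function names and scope names are hypothetical, and the response is modeled as a plain dictionary instead of a real HTTP framework object.

```python
import re
from typing import Optional


def check_scope(token_scopes: set, required_scope: str) -> dict:
    """API side: allow the call or deny it with an insufficient_scope challenge."""
    if required_scope in token_scopes:
        return {"status": 200, "headers": {}}
    challenge = f'Bearer error="insufficient_scope", scope="{required_scope}"'
    return {"status": 403, "headers": {"WWW-Authenticate": challenge}}


def scope_to_request(response: dict) -> Optional[str]:
    """Agent side: read which scope to ask the user for, if access was denied."""
    if response["status"] != 403:
        return None
    header = response["headers"].get("WWW-Authenticate", "")
    match = re.search(r'\bscope="([^"]*)"', header)
    return match.group(1) if match else None


# The agent holds a read-only token but now tries to make a payment.
denied = check_scope({"payments.read"}, "payments.write")
needed = scope_to_request(denied)
print(denied["status"], needed)
```

The agent would then start a new authorization request for the missing scope, which puts the consent screen in front of the user again.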

Customize User Consents

Vendors should allow you to tailor the contents of the consent. In some cases, consent can mean that the user grants access but attaches additional conditions. When the user consents to a scope, the claims associated with that scope are issued in access tokens. Some claims can originate from user input, such as values the user enters into a custom consent form. The following form shows an example where the user consents to a level of access but with a user-defined limit on the transaction amount and on how long the AI agent can use the access.
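As a rough sketch of how user input could flow from a consent form into token claims and then into an API decision, consider the following. The form fields and the `max_amount` claim are hypothetical names chosen for illustration; `scope` and `exp` follow common access token conventions, with `exp` expressed in seconds since the epoch.

```python
def build_claims(scope: str, consent_form: dict, now: int) -> dict:
    """Authorization server side: turn consent form input into token claims."""
    return {
        "scope": scope,
        "max_amount": consent_form["max_amount"],          # user-defined limit
        "exp": now + consent_form["valid_minutes"] * 60,   # user-defined lifetime
    }


def may_execute(claims: dict, required_scope: str, amount: float, now: int) -> bool:
    """API side: enforce the scope plus the conditions the user attached."""
    if now >= claims["exp"]:
        return False
    if required_scope not in claims["scope"].split():
        return False
    return amount <= claims["max_amount"]


# The user allows purchases up to 50 (in some currency) for the next 15 minutes.
issued_at = 1_700_000_000
claims = build_claims("orders.create", {"max_amount": 50, "valid_minutes": 15}, issued_at)
print(may_execute(claims, "orders.create", 30, issued_at + 60))
print(may_execute(claims, "orders.create", 80, issued_at + 60))
```

Because the limits travel inside the token, the API can enforce them without asking the agent to behave.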

Allow Revocation of Long-Lived Consents

If you give the agent long-lasting access, make sure that the vendor allows you to manage the consent after granting it. You should be able to revoke the consent at any time, for example if you no longer plan to use the agent or if you learn that the agent has been breached or is otherwise faulty. Revoking consent will, in effect, invalidate any refresh tokens, as the agent will no longer be able to use them to get new access tokens. Ideally, revoking consent should also revoke any active access tokens.
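The effect of revocation can be illustrated with a small in-memory sketch in which every token is tied to the consent it was issued under. The class and its methods are hypothetical and assume tokens are checked against a central store; if an API validates self-contained access tokens locally, it will not notice the revocation until the token expires, which is one more reason to keep access tokens short-lived.

```python
class ConsentStore:
    """Tracks which tokens were issued under which consent (illustrative only)."""

    def __init__(self):
        self._tokens = {}  # token value -> consent id

    def issue(self, consent_id: str, token: str) -> None:
        """Record a refresh or access token issued under a consent."""
        self._tokens[token] = consent_id

    def is_active(self, token: str) -> bool:
        """A token is active only while its consent has not been revoked."""
        return token in self._tokens

    def revoke_consent(self, consent_id: str) -> int:
        """Remove every token issued under the consent; return how many."""
        revoked = [t for t, c in self._tokens.items() if c == consent_id]
        for token in revoked:
            del self._tokens[token]
        return len(revoked)


store = ConsentStore()
store.issue("consent-1", "refresh-abc")
store.issue("consent-1", "access-xyz")
store.issue("consent-2", "access-other")

removed = store.revoke_consent("consent-1")
print(removed, store.is_active("refresh-abc"), store.is_active("access-other"))
```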

Conclusion

In current systems, the consent screen tends to be lacking — either it is too vague or too detailed, or it does not give the user the necessary options. With the rising popularity of AI agents, it becomes more important than ever to properly acquire the user’s consent — what they allow the agent to do in their name.

This requires careful and informed actions from users, but is only possible if users have the right tools available. Vendors of both backend systems and AI agents should take this into consideration and ensure that their products offer the correct features for the whole ecosystem to remain secure.
